Join us on an alphabetical journey through the research themes and key concepts behind our work!

A is for Acoustics

Sound is all around us: it accompanies everything we do. Human beings are both sources and receivers of sound. Acoustics is the branch of science and engineering concerned with all aspects of sound, including its generation and transmission, its control and use, and its effects on people and the environment.

Vitruvius presenting De Architectura to Augustus. From Vitruvius on Architecture (1684) by Thomas Gordon Smith. Image credit: Sebastian Le Clerc, public domain, via Wikimedia Commons

The scientific study of sound dates at least as far back as the 6th century BCE, with experiments by Greek philosopher and mathematician Pythagoras into harmonics and vibrating strings [1]. Several centuries later, the Roman engineer and architect Vitruvius (d. 15 BCE) is credited with the first formal treatment of architectural acoustics in his multi-volume work De Architectura, in which he discusses sound propagation in theatres [2].

The use of the term “acoustics” to define a branch of science came much later. Although the word had been used in the context of sound previously [3], the definition is usually attributed to physicist and mathematician Joseph Sauveur, who in 1701 proposed a new field of study called acoustique. He described his new discipline as “a science superior to music”, because it encompassed all sound, not simply music and harmonics [4].

When we think about acoustics, the first thing that springs to mind might be architectural acoustics and the optimisation of indoor sound. However, modern acoustics is a multidisciplinary field, encompassing physics, material science, engineering, psychology, physiology, music, architecture, neuroscience, environmental science and mathematics. Some of the many other branches of acoustics include:

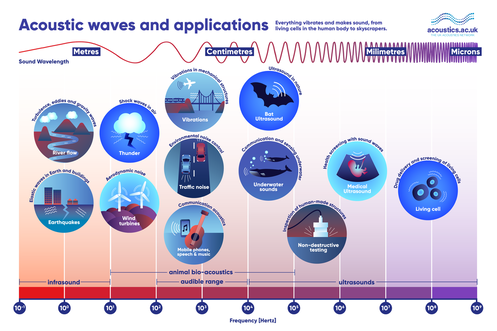

Image created by UK Acoustics Network members, reproduced with permission.

Physical acoustics: Physical acoustics overlaps with other fields of acoustics such as engineering and medical imaging. It also encompasses sound beyond the human audible range. Sound at frequencies below the audible range is called infrasound; many natural phenomena, such as earthquakes and thunder, generate infrasound. Sound at frequencies above the audible range is called ultrasound, whose uses in medical imaging are well known. There are many other interesting physical acoustic phenomena, such as the photoacoustic effect, in which sound is generated by the interaction of light with matter.

Musical acoustics: This is a broad field encompassing the design of musical instruments, building acoustics, the human perception of sound and music, and music recording and reproduction.

Psychoacoustics and physiological acoustics: These include the study of people's perception of sound and its physical and psychological impact on us, and the physiology of hearing and hearing loss.

Underwater acoustics and oceanography: The study of underwater acoustics and sonar has military and commercial applications, while in marine biology, acoustics can lead to a better understanding of animal communication, and the effect of human-generated noise on marine animals.

Environmental noise control: We know that exposure to loud noise can cause hearing loss, but this is not the only adverse effect. Noise can reduce our quality of life, and even our life expectancy: it is estimated to cause 12,000 premature deaths in Europe each year [7]. For this reason, noise reduction and control are an extremely active branch of acoustics.

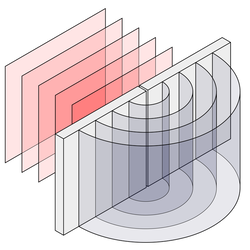

Researchers in the Mathematics of Waves and Materials group apply mathematical modelling to acoustics. Research themes include the design of acoustic metamaterials and phononic crystals: materials whose properties are determined by their structures, rather than their chemical composition. Acoustic metamaterials have structural elements that are smaller than the wavelengths of the sounds they are designed to interact with. This leads to properties that are not found in conventional materials. Other areas of acoustical research within the group include aero- and hydroacoustics: the study of the noise generated by fluid flows both on their own (jet noise) and when interacting with solid boundaries (trailing edge noise) in air and water, and the interaction of sound waves with materials, including nanofibrous materials.

2020-2021 is the International Year of Sound, a global initiative to highlight the importance of sound and acoustics in society. You can find learning resources and events from acoustics organisations around the world on the Year of Sound website.

[1] Zhmud, L. (2012) "8: Harmonics and Acoustics in Pythagoras and the Early Pythagoreans", Oxford Scholarship Online

[2] Maconie, R. (2005) "Musical Acoustics in the age of Vitruvius", Musical Times; London, 146: 75-82.

[3] Dostrovsky, S. (2008) "Sauveur, Joseph", in Complete Dictionary of Scientific Biography vol. 12, New York, NY: Charles Scribner's Sons, 127-129.

[4] Fix, A. (2015) "A Science Superior to Music: Joseph Sauveur and the Estrangement between Music and Acoustics", Phys. Perspect., 17: 173–197.

[5] Rossing, T. D. (2014) "A Brief History of Acoustics" in Springer Handbook of Acoustics. New York, NY: Springer, 9-24

[6] Rossing, T. D. (2014) "Introduction to Acoustics" in Springer Handbook of Acoustics. New York, NY: Springer, 1-6

[7] European Environment Agency, Environmental noise in Europe — 2020, EEA Report No 22/2019

[2] Maconie, R. (2005) "Musical Acoustics in the age of Vitruvius", Musical Times; London, 146: 75-82.

[3] Dostrovsky, S. (2008) "Sauveur, Joseph", in Complete Dictionary of Scientific Biography vol. 12, New York, NY: Charles Scribner's Sons, 127-129.

[4] Fix, A. (2015) "A Science Superior to Music: Joseph Sauveur and the Estrangement between Music and Acoustics", Phys. Perspect., 17: 173–197.

[5] Rossing, T. D (2014) "A Brief History of Acoustics" in Springer Handbook of Acoustics. New York, NY: Springer, 9-24

[6] Rossing T.D. (2014) "Introduction to Acoustics" in Springer Handbook of Acoustics. New York, NY: Springer, 1-6

[7] European Environment Agency, Environmental noise in Europe — 2020, EEA Report No 22/2019

B is for Boundary Conditions

Just as in high-school mathematics or physics, where one imposes conditions on the solution of an ordinary differential equation (such as the equation of a simple harmonic oscillator), in continuum mechanics boundary conditions are imposed on the solutions of the partial differential equations that mathematically represent the laws governing the generic behaviour of particular materials.

A familiar example is a dripping tap. As water slowly accumulates at the outlet of the tap, its surface forms a smooth, near-spherical shape because surface tension acts to minimize the exposed surface area. Eventually it becomes energetically favourable for a drop to detach and fall away, and the process repeats. The partial differential equations in this context are the Navier-Stokes equations, and the boundary conditions include the surface tension at the interface between water and air, the pressure gradient or flow rate within the tap, and the contact conditions where water, air and the tap meet. In general, the flow of water, or of any incompressible Newtonian fluid, is determined by the Reynolds number together with the boundary conditions.
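
The Reynolds number is the ratio of inertial to viscous forces, \(Re = \rho U L / \mu\), where \(\rho\) is the density, \(U\) a characteristic speed, \(L\) a characteristic length and \(\mu\) the dynamic viscosity. The definition is standard but is not spelled out above; the short Python sketch below evaluates it for water, with the flow speed and outlet size chosen purely as hypothetical, order-of-magnitude values.

```python
def reynolds_number(density, speed, length, viscosity):
    """Re = rho * U * L / mu (dimensionless ratio of inertial to viscous forces)."""
    return density * speed * length / viscosity

# Assumed properties of water near 20 °C
rho_water = 998.0   # kg/m^3
mu_water = 1.0e-3   # Pa s

# Hypothetical drip-scale values, for illustration only
U = 0.1             # m/s, flow speed at the tap outlet
L = 5.0e-3          # m, tap-outlet diameter

re = reynolds_number(rho_water, U, L, mu_water)
print(f"Re = {re:.0f}")
```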

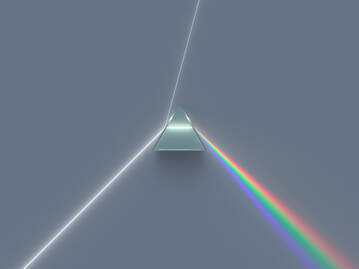

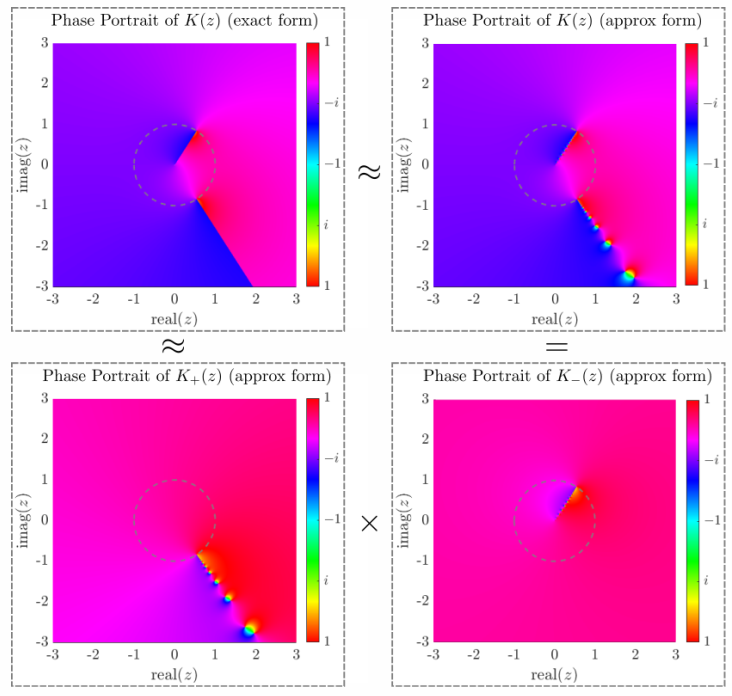

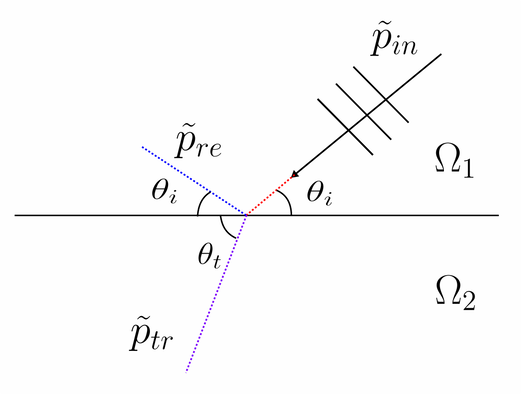

The refraction of a light ray as it passes from one medium into another can also be understood in terms of boundary conditions applied at the interface between the two media. Applying Fermat's principle of least time and solving the resulting Euler-Lagrange equation, one can show that \(\frac{\sin \theta}{c}\) must be the same on either side of the boundary, where \(\theta\) is the angle (measured from the normal) of incidence on one side and of refraction on the other, and \(c\) is the local speed of light in each medium. This result is known as Snell's Law, although it was described by Ibn Sahl almost 600 years before Snell was born [1].
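
As a small Python sketch of this boundary condition, the function below takes the incidence angle and the wave speeds on each side and solves \(\sin\theta_1 / c_1 = \sin\theta_2 / c_2\) for the refraction angle. The refractive indices used to set the speeds in air and water are assumed typical values, not figures from the text. When \(\sin\theta_2\) would exceed 1, no refracted ray exists (total internal reflection) and the function returns None.

```python
import math

def refraction_angle(theta_incident_deg, c1, c2):
    """Refraction angle in degrees from sin(t1)/c1 = sin(t2)/c2,
    or None if no transmitted ray exists (total internal reflection)."""
    s = math.sin(math.radians(theta_incident_deg)) * c2 / c1
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))

C = 299_792_458.0        # speed of light in vacuum, m/s
c_air = C / 1.000293     # assumed refractive index of air
c_water = C / 1.333      # assumed refractive index of water

print(refraction_angle(30.0, c_air, c_water))  # air to water: bends towards the normal
print(refraction_angle(60.0, c_water, c_air))  # water to air beyond the critical angle
```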

The extent to which the full detail of the boundaries is incorporated within a mathematical model can depend on whether there is a practical need for a quantitative result in a particular case, for example the stress on a rocket's nose cone, or the circulation of air in a hospital. If the modelling is intended mainly to understand the underlying phenomena in a more qualitative sense, then an idealized, representative form of the problem is often developed, typically involving simplifying assumptions about spatial symmetry, infinite extent, or smoothness of walls. Such simplifications are usually intended to allow some progress with a purely analytical approach, or to test a computational approach at the proof-of-concept stage. Solving the fundamental equations in full generality may be impossible, but with restrictions on the possible solutions, e.g. an assumption of spherical symmetry, or taking an asymptotic limit in terms of a small parameter, the problem may become tractable. In effect, the simplifications can be interpreted as modifications of the boundary conditions. The relevance of the idealized solution to the full, unsimplified problem then depends on whether the boundary conditions are amenable to such simplifications.

When assessing the robustness and accuracy of a new computational algorithm, certain well-studied and sometimes challenging combinations of boundary conditions are used, known as benchmark problems. These are problems for which the correct solutions are known, and the idea is to highlight any limitations of the algorithm, or any mistakes made in its implementation. For example, the lid-driven cavity is a standard benchmark problem in CFD (computational fluid dynamics) for the validation of numerical methods [2].
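
The lid-driven cavity needs a full Navier-Stokes solver, so as a much simpler stand-in (our substitution, not the benchmark referred to above) the sketch below checks a finite-difference solver for \(-u'' = \pi^2 \sin(\pi x)\) on \([0, 1]\) with boundary conditions \(u(0) = u(1) = 0\), whose exact solution \(u = \sin(\pi x)\) is known. A correctly implemented second-order scheme should show the maximum error falling by roughly a factor of four each time the grid spacing is halved; a different rate would point to a mistake.

```python
import math

def solve_poisson_dirichlet(n):
    """Solve -u'' = pi^2 sin(pi x) on [0, 1] with u(0) = u(1) = 0 using
    second-order central differences on n interior points.
    The tridiagonal system is solved with the Thomas algorithm."""
    h = 1.0 / (n + 1)
    x = [(i + 1) * h for i in range(n)]
    # Row i: (-u[i-1] + 2 u[i] - u[i+1]) / h^2 = f(x_i); boundary values are zero
    a = [-1.0] * n  # sub-diagonal
    b = [2.0] * n   # diagonal
    c = [-1.0] * n  # super-diagonal
    d = [h * h * math.pi**2 * math.sin(math.pi * xi) for xi in x]
    # Forward elimination
    for i in range(1, n):
        w = a[i] / b[i - 1]
        b[i] -= w * c[i - 1]
        d[i] -= w * d[i - 1]
    # Back substitution
    u = [0.0] * n
    u[-1] = d[-1] / b[-1]
    for i in range(n - 2, -1, -1):
        u[i] = (d[i] - c[i] * u[i + 1]) / b[i]
    return x, u

def max_error(n):
    """Maximum nodal error against the known exact solution sin(pi x)."""
    x, u = solve_poisson_dirichlet(n)
    return max(abs(ui - math.sin(math.pi * xi)) for xi, ui in zip(x, u))

# Grid spacings 1/16, 1/32, 1/64
for n in (15, 31, 63):
    print(n, max_error(n))
```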

N. F. Morrison

[1] Rashed, R. (1990) "A Pioneer in Anaclastics: Ibn Sahl on Burning Mirrors and Lenses", Isis, 81(3): 464-491

[2] Hinch, E. J. (2020) "1: The Driven Cavity" in Think Before You Compute. Cambridge: Cambridge University Press, 3-8

C is for Composite Materials

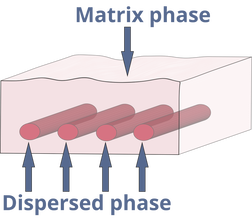

Composite materials can be defined as materials manufactured by combining two or more phases to perform tasks that none of the constituent materials can achieve alone. One phase is continuous and is called the matrix, while the other, discontinuous phase is known as the reinforcement or filler [1] (Figure 1). The matrix binds the reinforcement and gives the composite its net shape. The interfacial region between reinforcement and matrix facilitates the transfer of forces between the relatively weak matrix and the stronger reinforcement, so a strong interfacial bond is necessary to enhance the mechanical properties of the composite.

Composite materials can offer properties superior to those of their constituent materials. Humans have been making composites since ancient times: adobe brick, made of straw and mud, is an early example. Wood is also a composite material, made from long cellulose fibres held together by lignin. In recent times, the use of composite materials has increased in the aerospace, automotive, marine, defence, wind energy and construction industries, owing to their light weight, high specific stiffness and strength, corrosion resistance, buoyancy, damage tolerance, impact resistance, and damping and sound-absorbing properties.

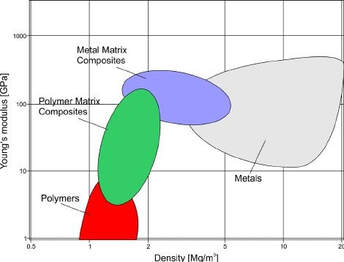

The relationship between Young’s modulus and density for metals, polymers and composites is presented in Figure 2, which shows that composite materials can offer better specific stiffness (E / ρ) than metals.
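
As a rough illustration of the specific stiffness comparison, the sketch below computes \(E/\rho\) for a few materials. The property values are assumed, textbook-style figures rather than data read from Figure 2, and the composite value applies only along the fibre direction of a unidirectional laminate.

```python
# Assumed room-temperature properties: (Young's modulus in GPa, density in kg/m^3)
materials = {
    "steel": (200.0, 7850.0),
    "aluminium alloy": (70.0, 2700.0),
    "unidirectional CFRP (fibre direction)": (135.0, 1600.0),
}

def specific_stiffness(E_gpa, rho):
    """Return E/rho in MJ/kg (Pa divided by kg/m^3 is J/kg)."""
    return E_gpa * 1e9 / rho / 1e6

for name, (E, rho) in materials.items():
    print(f"{name:40s} E/rho = {specific_stiffness(E, rho):6.1f} MJ/kg")
```

Note that steel and aluminium alloy come out almost identical: for common metals, higher stiffness tends to come with proportionally higher density.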

The properties of composite materials can be tailored by selecting appropriate combinations of matrix and filler/reinforcement. The volume fraction of reinforcement also plays an important role in determining the performance of composites. Based on the type of matrix material, composites are generally classified as polymer matrix composites (PMCs), metal matrix composites (MMCs), ceramic matrix composites (CMCs) and carbon matrix composites (CAMCs).
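
One simple way to see why the volume fraction \(V_f\) matters is the rule of mixtures, a standard first estimate that is not given in the text. For aligned fibres loaded along their length (equal strain in both phases) it gives \(E_c = V_f E_f + (1 - V_f) E_m\); for loading across the fibres (equal stress) the inverse rule gives \(1/E_c = V_f/E_f + (1 - V_f)/E_m\). The fibre and matrix moduli below are assumed carbon-fibre and epoxy values, and the result is only an idealised estimate.

```python
def voigt_modulus(Ef, Em, Vf):
    """Rule of mixtures (equal strain, loading along aligned fibres)."""
    return Vf * Ef + (1.0 - Vf) * Em

def reuss_modulus(Ef, Em, Vf):
    """Inverse rule of mixtures (equal stress, loading across the fibres)."""
    return 1.0 / (Vf / Ef + (1.0 - Vf) / Em)

E_fibre, E_matrix = 230.0, 3.5  # GPa, assumed carbon fibre and epoxy moduli

for Vf in (0.3, 0.5, 0.6):
    print(f"Vf = {Vf:.1f}: longitudinal ~{voigt_modulus(E_fibre, E_matrix, Vf):6.1f} GPa, "
          f"transverse ~{reuss_modulus(E_fibre, E_matrix, Vf):4.1f} GPa")
```

The large gap between the two estimates shows why fibre-reinforced composites are strongly anisotropic.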

PMCs are the most widely used type of composite. They are further divided, according to the type of polymer matrix, into thermoset, thermoplastic and elastomeric composites. Thermoset matrices are used in high-performance composite materials: they become rigid after curing and can be used at elevated temperatures without losing structural rigidity. Thermoplastics, unlike thermosets, can be melted on heating. Examples of thermosetting polymer matrices include polyester, vinyl ester, epoxy, phenolic, cyanate ester, polyurethane, polyimide and bismaleimide. Polyethylene, polypropylene, polyamide, PEEK, thermoplastic polyimide, thermoplastic polyurethane, polycarbonate, PLA, polysulfone and polyphenylene sulphide are all examples of thermoplastic polymer matrices. Elastomers achieve their cross-linking through vulcanization; rubber is a well-known elastomeric material. Metal, ceramic and carbon matrices are used for highly specialised applications. For example, ceramic matrices are used for high-temperature applications, and carbon matrices for applications that involve friction and wear.

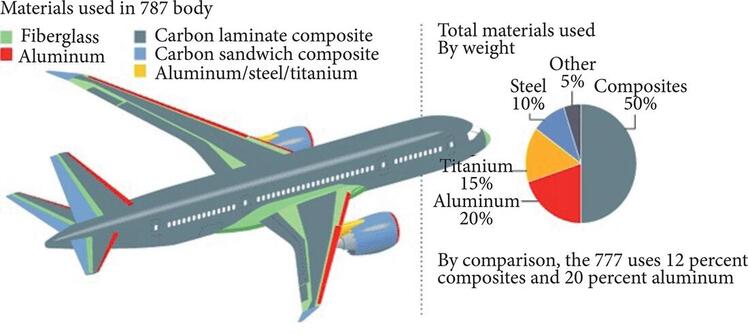

A variety of filler/reinforcement materials are available. Fibre-reinforced composites are popular for their high strength-to-weight and stiffness-to-weight ratios. Fibres such as glass, carbon, boron, ceramic and metal, as well as natural fibres such as flax, sisal and hemp, are used as reinforcements in many applications. Composites made from carbon fibres are popular in aerospace owing to their high strength and low weight. Components made from carbon fibre reinforced composites can be five times stronger than grade 1020 steel while weighing only 20% as much. In aerospace applications, high strength and low weight are highly desirable, for structural integrity and fuel economy. The use of composite materials in the Boeing 787 aircraft is depicted in Figure 3.

Composites manufactured by the combination of hollow microballoons (of glass, ceramic and plastic) and polymer matrix, generally known as syntactic foams, are popular for their acoustic, buoyancy, low moisture absorption and weight saving characteristics, and find their applications in marine and aerospace structures.

Numerous methods are available to manufacture composites, for example resin transfer moulding (RTM), vacuum infusion (VI), vacuum assisted resin transfer moulding, compression moulding and 3D printing. The selection of manufacturing method will mainly depend on the materials, part design and application.

Z. Yousaf

[1] Daniel, I. M. & Ishai, O. (2006) Engineering Mechanics of Composite Materials, New York: Oxford University Press

[2] Kickelbick, G. (2014) Hybrid Materials – Past, Present and Future, Hybrid Mater, 1: 39-51. DOI: 10.2478/hyma-2014-0001

[3] Alemour, B., Badran, O. & Hassan, M. R. (2019), A Review of Using Conductive Composite Materials in Solving Lightning Strike and Ice Accumulation Problems in Aviation, Journal of Aerospace Technology and Management, 11: e1919.

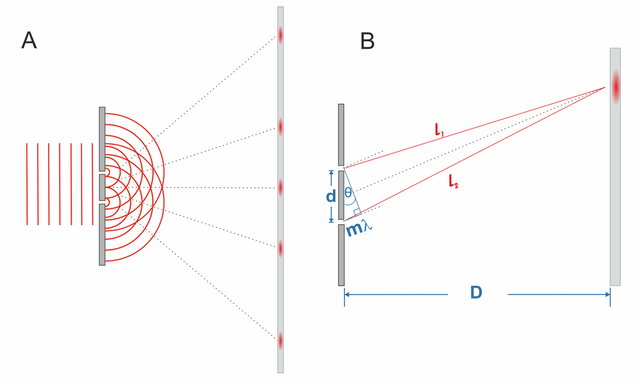

D is for Diffraction

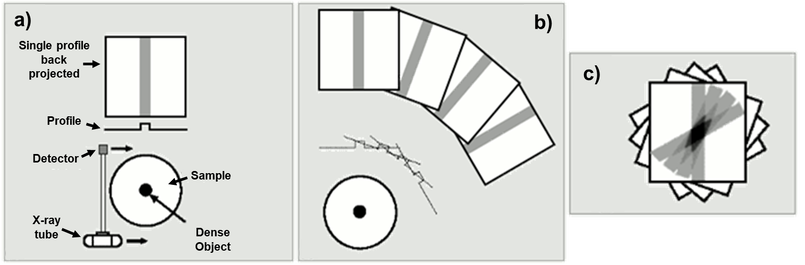

The term diffraction was coined in 1666 by Francesco Maria Grimaldi, after experimenting with light. He shone a light through a small hole in an opaque plate, and found that the light cast into the room covered an area larger than the hole it travelled through. This suggests that the light does not travel in straight lines through the hole but is bent around its edges [1]. This can be seen in Figure 1, where the wave becomes curved as it passes through the slit, covering an area larger than the slit on the other side. The theory of diffraction can be extended beyond light passing opaque surfaces to any system of waves travelling past or around an object. Whether those waves are acoustic, elastic, electromagnetic or even ocean waves, all can be diffracted.

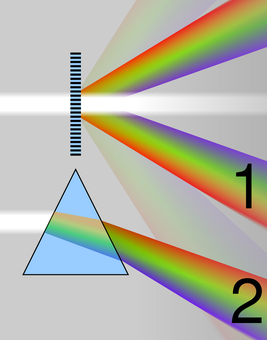

A practical use of diffraction can be seen in diffraction gratings. Diffraction gratings are periodic structures that split light into its component wavelengths. This happens because the angle through which a wave is diffracted depends on its wavelength, so the different wavelengths in visible light are diffracted through different angles as they pass through the grating. After the light passes through the grating, we can therefore see each colour component separately. Diffraction gratings are used in spectrometers to analyse the light emitted by a material. They can also be seen in everyday items such as CDs, where the closely spaced tracks of data diffract light shone onto the disc.
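
For light arriving perpendicular to a grating with line spacing \(d\), the standard grating equation \(d \sin\theta = m\lambda\) (not stated in the text) gives the angles \(\theta\) at which each diffraction order \(m\) appears. The sketch below assumes a spacing of 1.6 micrometres, roughly the track pitch of a CD, and typical blue and red wavelengths.

```python
import math

def grating_angles(wavelength, spacing):
    """Diffraction angles (degrees) from d sin(theta) = m * lambda at normal
    incidence, for every order m >= 1 that exists (sin(theta) <= 1)."""
    angles = []
    m = 1
    while m * wavelength / spacing <= 1.0:
        angles.append(math.degrees(math.asin(m * wavelength / spacing)))
        m += 1
    return angles

d = 1.6e-6  # m, assumed line spacing (roughly a CD's track pitch)
for colour, lam in (("blue", 450e-9), ("red", 650e-9)):
    print(colour, [round(a, 1) for a in grating_angles(lam, d)])
```

Longer (red) wavelengths are diffracted through larger angles than shorter (blue) ones, which is how the grating spreads white light into its colours.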

Diffraction is not to be confused with refraction, which is the bending of waves as they pass from one material into another, rather than around an obstacle. In Figure 2, we can see light being diffracted by a grating in the top image, and light being refracted by a glass prism below.

Diffraction is not confined to light: it occurs for any travelling wavefront. For example, the animation on the right shows the wave field produced when a plane wave travelling from the right-hand side is diffracted by what we call a half-plane. The half-plane is an infinitely thin barrier, shown as a line starting at \(x = 0\) and extending to the left towards \(-\infty\). On the right-hand side we see a field scattered by the edge of the half-plane, which looks like a cylindrical wave spreading out from the edge. On the left-hand side, where the wave travels past the half-plane, the wavefront is no longer uniform because the wave bends around the edge.

Scientists have gained a great deal of insight into the world around us from the theory of diffraction. Most intriguing is the occurrence of diffraction in the quantum world. Quantum physics is the study of matter and energy at the most fundamental level. In general, quantum theory considers the smallest of particles, and since these particles are the building blocks of the solid world around us, you might expect them to behave like solid objects. However, in 1927 US physicists Clinton Davisson and Lester Germer showed that electrons scattered from a nickel crystal produce a diffraction pattern, and later experiments fired streams of electrons through narrow slits and recorded their positions on the other side [2]. If each particle behaved like a discrete solid object, we would expect to see the shape of the slit replicated in the image on the other side. Instead, the electrons are diffracted and show wave-like behaviour, creating an image larger than the slit.
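
Why can electrons be diffracted by the spacing between atoms? The de Broglie relation \(\lambda = h/p\), which the text does not state, assigns a wavelength to a particle of momentum \(p\). The sketch below uses the non-relativistic form \(\lambda = h/\sqrt{2 m E}\) for an electron with an assumed kinetic energy of 54 eV, the value commonly quoted for the Davisson-Germer experiment.

```python
import math

H = 6.62607015e-34        # Planck constant, J s
M_E = 9.1093837015e-31    # electron mass, kg
EV = 1.602176634e-19      # joules per electronvolt

def electron_wavelength(kinetic_energy_ev):
    """Non-relativistic de Broglie wavelength lambda = h / sqrt(2 m E), in metres."""
    return H / math.sqrt(2.0 * M_E * kinetic_energy_ev * EV)

lam = electron_wavelength(54.0)  # assumed beam energy of 54 eV
print(f"{lam * 1e9:.3f} nm")
```

The result, roughly 0.17 nm, is comparable to the spacing between atoms in a crystal, which is why a crystal can act as a diffraction grating for electrons.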

T. White

[1] Cajori, F. (1899) A history of physics in its elementary branches: including the evolution of physical laboratories, New York: Macmillan

[2] Ball, P. (2018) Two slits and one hell of a quantum conundrum, Nature, 560 (7717):165-166

Animation credit: M. Nethercote

E is for Elasticity

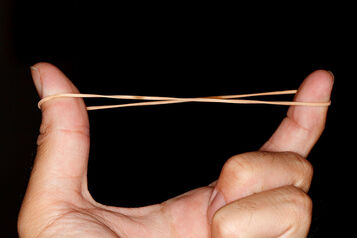

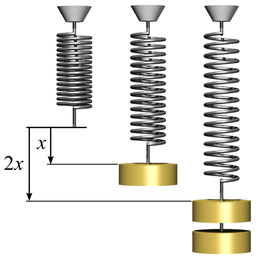

Elasticity is the property of a material that enables it to recover its original form when it is stretched, compressed, or otherwise deformed. All materials are elastic to some degree; stiff materials like steel and even diamond exhibit elasticity, but the property is more easily visualised in highly deformable materials, e.g. rubber (elastic) bands or soft foams, see Fig. 1.

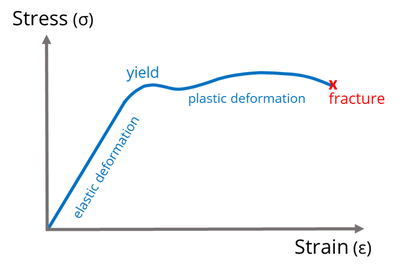

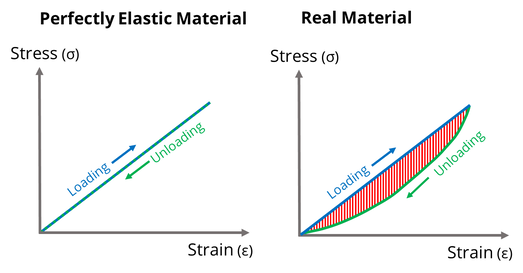

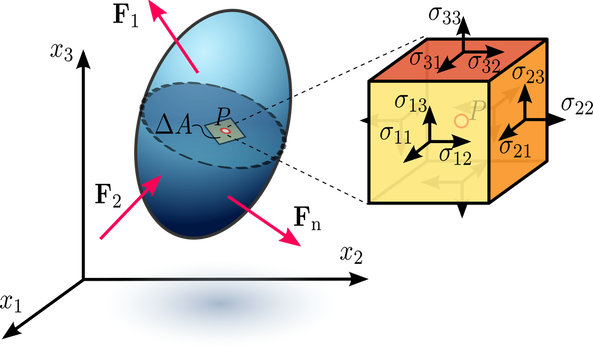

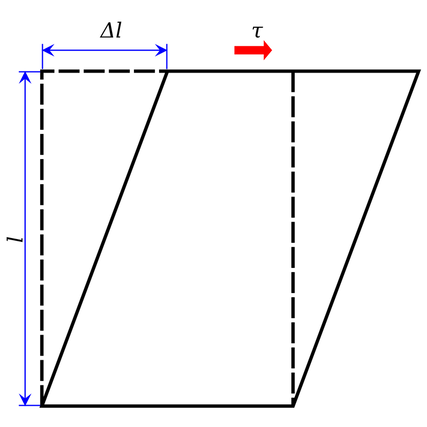

We usually describe the elasticity of a material by its relationship between force and extension, or equivalently by its stress-strain relationship, where stress is the force applied per unit area, and strain is the relative deformation (the change in length divided by the original length). With reference to Fig. 2, many materials exhibit a linear force-extension, or stress-strain, relationship, and this type of elasticity is therefore known as linear elasticity. It originates in the work of Robert Hooke, who in 1660 discovered that the extension of a spring is proportional to the weight applied to it, see Fig. 3. If we keep adding weight to the spring, at some point it will no longer be able to sustain the load and it will break. In fact, before this happens, the material from which the spring is made will have undergone so-called plastic deformation, in which the atoms comprising the material are permanently rearranged. This is illustrated in Fig. 2, where we indicate that all of this behaviour lies beyond the elastic limit. If the weights (or, more generally, forces) remain within the elastic regime, then when the weight is removed the spring returns to its original rest state.

In reality, perfect elasticity is not possible: according to the second law of thermodynamics there will always be some loss in the system, e.g. via friction or heat. The energy lost is measured by the area between the loading and unloading curves in the stress-strain relationship, see Fig. 4. However, we can get very close to perfect elasticity in many systems (the spring is a very good example), especially if we deform the material very slowly.
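
The energy lost per unit volume in one loading-unloading cycle is the area enclosed between the two curves. The sketch below computes that area with the trapezoidal rule for a pair of hypothetical curves (the modulus and the loop-width parameter are illustrative assumptions, not measured data) and compares it with the exact value for this simple model, \(k\,e_{\max}^3/3\).

```python
def trapezoid(x, y):
    """Trapezoidal-rule integral of samples y over points x."""
    return sum((x[i + 1] - x[i]) * (y[i] + y[i + 1]) / 2.0 for i in range(len(x) - 1))

E = 1.0e6        # Pa, assumed small-strain modulus of a rubber-like material
k = 0.2e6        # Pa, hypothetical parameter setting the width of the loop
eps_max = 0.5    # peak strain in the cycle

n = 1001
strain = [eps_max * i / (n - 1) for i in range(n)]
# Hypothetical loading and unloading curves that meet at zero strain and at eps_max
load = [E * e + k * e * (eps_max - e) for e in strain]
unload = [E * e - k * e * (eps_max - e) for e in strain]

# Energy dissipated per unit volume per cycle = area between the two curves
dissipated = trapezoid(strain, load) - trapezoid(strain, unload)
print(f"dissipated ~ {dissipated:.0f} J/m^3 (exact for this model: {k * eps_max**3 / 3:.0f})")
```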

The elasticity of a material can be characterised by its so-called elastic modulus, frequently termed Young’s modulus after Thomas Young and his work in the 19th century. When put under tension, as we have already discussed, many materials are described by the linear stress-strain relationship \(\sigma = E e\), where \(\sigma\) is the stress applied in tension, \(e\) is the extensional strain and \(E\) is Young’s modulus. The larger \(E\) is, the stiffer the material, since it takes more stress (or force) to produce a given strain. In this sense, stretching your ear is much easier (try it, but don’t pull too hard!) than stretching a piece of concrete or diamond.

To put some numbers on this, the Young’s modulus of a soft material such as rubber is around five orders of magnitude (roughly 100,000 times) smaller than that of diamond. Therefore, if a stress of, say, 1 MPa is applied to a piece of rubber, it would produce a strain of around 10%, whereas the strain in diamond would be only 0.0001%. This is precisely why you can see elasticity in soft tissue and rubber but not in very stiff materials such as diamond, and why there is a general perception that stiff materials such as wood, steel and diamond are not elastic. In reality they are; it is just that the deformation cannot be seen with the naked eye.
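
These numbers follow directly from \(e = \sigma / E\). In the sketch below the two moduli are assumed round values (10 MPa for rubber and 1000 GPa for diamond), chosen to be consistent with the strains quoted above.

```python
def strain(stress, youngs_modulus):
    """Linear elasticity (Hooke's law): e = sigma / E."""
    return stress / youngs_modulus

sigma = 1.0e6        # applied stress, 1 MPa
E_rubber = 10.0e6    # Pa, assumed value for rubber (about 10 MPa)
E_diamond = 1.0e12   # Pa, assumed value for diamond (about 1000 GPa)

print(f"rubber:  {100 * strain(sigma, E_rubber):.1f} %")
print(f"diamond: {100 * strain(sigma, E_diamond):.4f} %")
```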

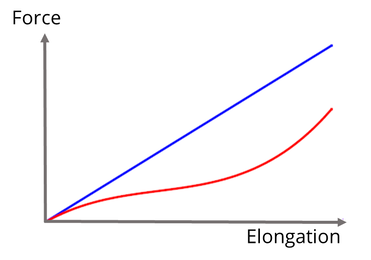

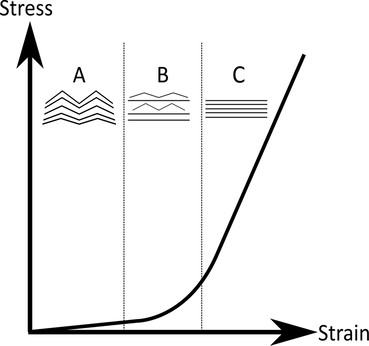

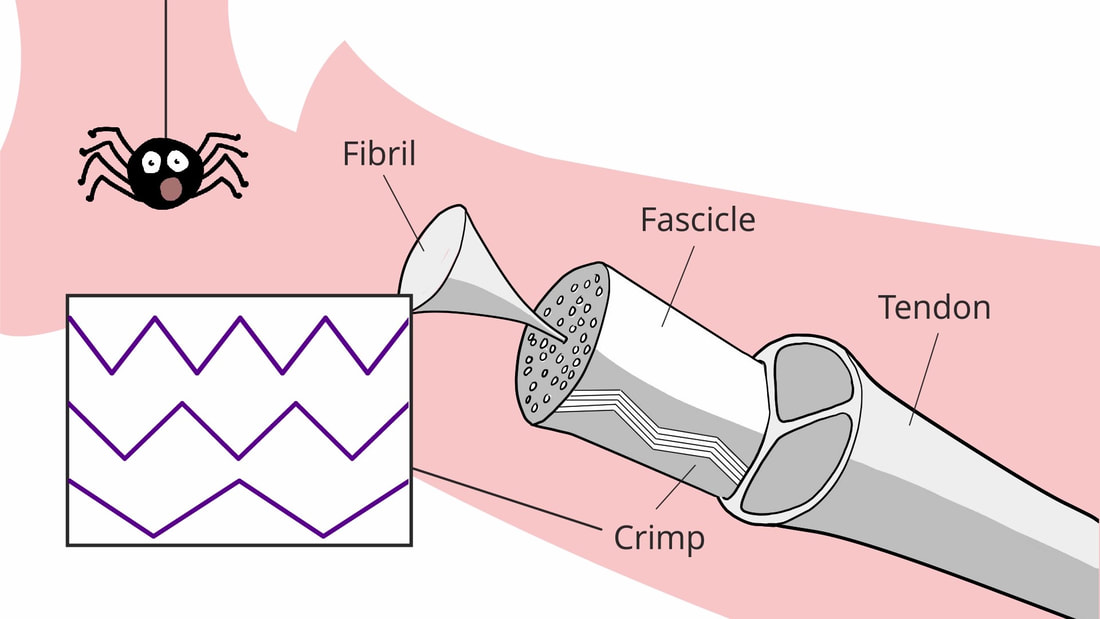

Not all materials are linear elastic over their entire range of elastic deformation, although they usually are in some limit close to the origin of the stress-strain curve. Soft tissue, which we have just mentioned, is highly nonlinear: it is not Hookean and does not exhibit proportionality between stress and strain. Instead, its behaviour is defined by a more complex relationship between stress and strain. The study of such materials is known as nonlinear elasticity. Understanding this nonlinear relationship is extremely important, and assuming that an elastic response is linear when it is not can be very dangerous. Consider, for example, a bungee cord, responsible for the safe but exciting launch of someone over the side of a bridge into a ravine below. Bungee cords are typically nonlinear elastic, exhibiting the kind of stress-strain relationship depicted by the red curve in Fig. 5. For a given force (weight), the bungee cord extends much further than it would if it were linear elastic (the blue curve in Fig. 5) with its Young’s modulus taken as the value measured in the small-strain regime. If we are relying on the cord to stop us at the correct distance (depending on what is below us!), then we need a good understanding of its elasticity! More generally, nonlinear elasticity is critical for the design of many soft materials and devices, e.g. polymers, isolation mounts, maxillofacial prosthetics and artificial tendons.
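
To illustrate the bungee-cord warning, the sketch below compares the static extension under a fixed weight for a linear spring and for a hypothetical softening law \(F = kx/(1 + x/a)\) with the same small-strain stiffness \(k\). The law, the stiffness, the length scale \(a\) and the 700 N load are all illustrative assumptions, not a model of any real cord, and the sketch ignores the dynamics of the fall.

```python
def linear_extension(force, k):
    """Hooke's law: x = F / k."""
    return force / k

def softening_extension(force, k, a):
    """Extension under the hypothetical softening law F = k x / (1 + x / a).
    It has the same initial stiffness k as the linear model and is inverted in
    closed form; it is only valid for F < k * a, a force this model never reaches."""
    if force >= k * a:
        raise ValueError("force exceeds the capacity of this model")
    return force * a / (k * a - force)

k = 100.0   # N/m, hypothetical small-strain stiffness of the cord
a = 20.0    # m, hypothetical softening length scale
W = 700.0   # N, weight of a jumper of roughly 70 kg (static load only)

print(f"linear:    {linear_extension(W, k):.1f} m")
print(f"nonlinear: {softening_extension(W, k, a):.1f} m")
```

Both models agree for small loads, but under the full weight the softening cord stretches more than 50% further than the linear estimate.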

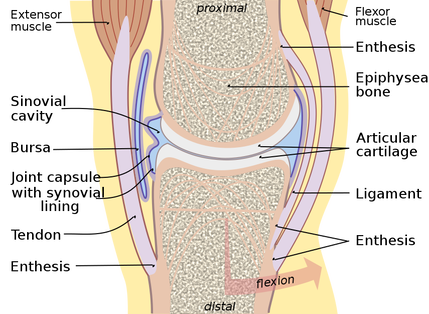

Understanding a material’s elastic response is fundamental to humankind. It plays a critical role in almost everything we do, from taking a walk (made possible by the elasticity of our tendons and ligaments), to driving a car (the elasticity of the tyres is crucial, as is the car's suspension), to understanding how earthquakes (which generate elastic waves that propagate through the earth) could potentially damage entire cities. In materials design, therefore, understanding and then tailoring a material's elasticity is absolutely critical. Mathematical models are important for this purpose, not least because they can reduce the amount of experimental testing required, and with it the impact on the environment. Modelling allows us to explore how a huge parameter space of properties affects the overall elasticity of complex materials. Our research group has carried out a variety of work over the last decade in the areas of composite materials and elastic metamaterials.

W. J. Parnell

F is for Frequency

Frequency is a simple property, but one which underpins a vast amount both of science and of our sensory perception of the world. The frequency of a wave is the number of complete cycles that pass a fixed point in one second, and is the inverse of the wave’s period. The SI unit of frequency takes its name from German physicist Heinrich Hertz, who experimentally confirmed James Clerk Maxwell’s theory of the electromagnetic field [1]. One hertz (Hz) is equivalent to one cycle, or oscillation, per second.

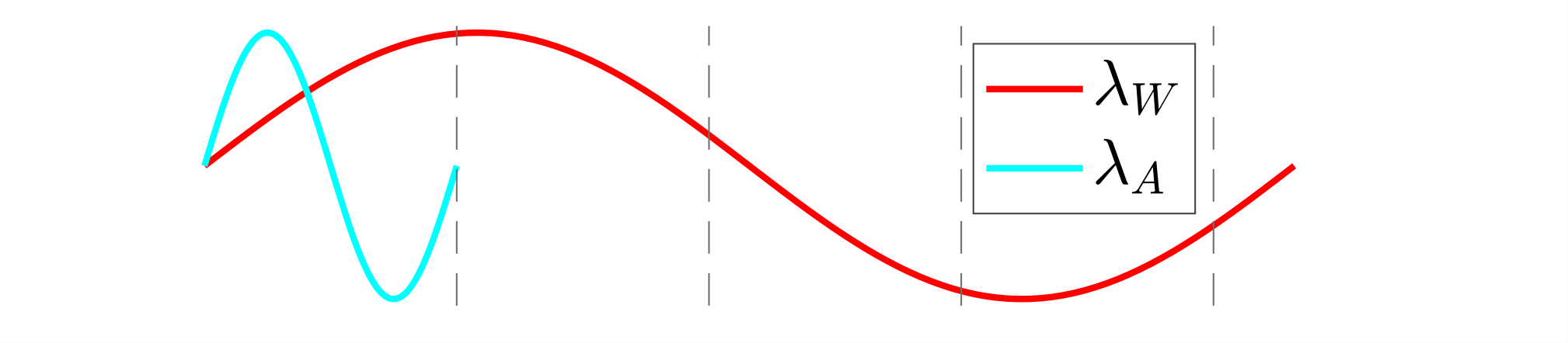

Wave velocity, \(v\), wavelength, \(\lambda\), and frequency \(f\) are connected by the relationship \(v = \lambda f\), from which it follows that for waves travelling at a fixed velocity, frequency and wavelength are inversely proportional.
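
Rearranging \(v = \lambda f\) as \(\lambda = v/f\) gives the wavelength for any frequency. The sketch below assumes a speed of sound in air of 343 m/s (about 20 °C) and uses the vacuum speed of light; it reproduces the audible and visible ranges discussed in this section.

```python
def wavelength(speed, frequency):
    """lambda = v / f, rearranged from v = lambda * f."""
    return speed / frequency

C_SOUND_AIR = 343.0        # m/s, assumed speed of sound in air at about 20 °C
C_LIGHT = 299_792_458.0    # m/s, speed of light in vacuum

for f in (20.0, 20_000.0):   # approximate limits of human hearing
    print(f"sound {f:>8.0f} Hz -> {wavelength(C_SOUND_AIR, f):.4f} m")
for f in (4e14, 8e14):       # approximate limits of visible light
    print(f"light {f:.1e} Hz -> {wavelength(C_LIGHT, f) * 1e9:.0f} nm")
```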

Frequency and electromagnetic waves

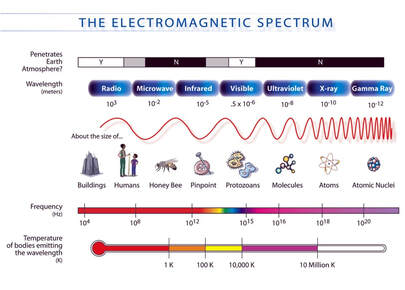

The illustration on the right depicts the electromagnetic (EM) spectrum, from radio waves, with frequencies below about 300 GHz and wavelengths greater than 1 mm, to high-frequency gamma rays, with frequencies above \(10^{19}\) Hz and wavelengths on the scale of atomic nuclei. The range of frequencies visible to humans represents only a very narrow slice of the EM spectrum, between \(4 \times 10^{14}\) Hz (red) and \(8 \times 10^{14}\) Hz (violet). Other animals are able to see different frequencies, including Arctic reindeer, whose vision extends into the ultraviolet, which may enable them to distinguish food such as UV-absorbing lichen in the Arctic winter [2].

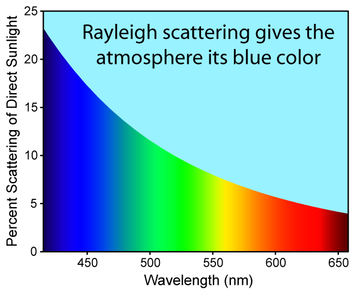

Wave behaviours such as refraction, diffraction and scattering are frequency dependent. The sky gets its blue colour from the frequency dependence of Rayleigh scattering (left), where light is scattered by particles much smaller than the wavelengths of the light, such as molecules in air. The scattering intensity (the amount of light scattered at a given angle) is proportional to the fourth power of the frequency of the light, so blue light is more scattered than red.
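
Because the scattered intensity scales with the fourth power of frequency, doubling the frequency increases it sixteenfold. The one-function sketch below compares the violet and red edges of the visible range, using the approximate frequencies quoted above; it captures nothing about Rayleigh scattering beyond this scaling.

```python
def rayleigh_ratio(f1, f2):
    """Relative Rayleigh-scattered intensity at frequency f1 versus f2 (I scales as f^4)."""
    return (f1 / f2) ** 4

print(rayleigh_ratio(8e14, 4e14))  # violet edge vs red edge of the visible range: 16.0
```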

Frequency in sound waves

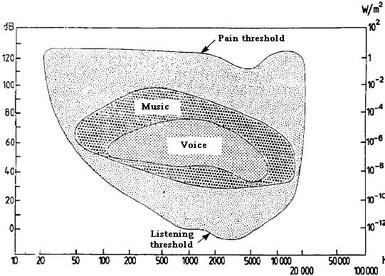

While the range of visible light spans less than one order of magnitude, humans can hear sounds spanning three orders of magnitude in frequency, from about 20 Hz to 20 kHz. This corresponds to wavelengths in air of between 17 mm and 17 m. Beyond the audible range lie infrasonic and ultrasonic frequencies. Some animals, such as elephants and certain marine mammals, are able to communicate over large distances using infrasound, and the uses of ultrasound in imaging are well known.

The frequency of a sound determines its pitch: high-pitched sounds have high frequencies, and low-pitched sounds have low frequencies. However, human sensitivity to sound is not uniform across all audible frequencies. In the image below, it can be seen that the hearing threshold (in decibels) for low-frequency sound is higher than for higher frequencies, although the pain threshold is relatively uniform across the audible range.


The relationship between frequency and pitch is perhaps most apparent in music. Most musical scales are based on the octave, a musical interval in which the frequency of the higher note is twice that of the lower. Certain combinations of notes sound harmonious, or consonant, when played together, while others sound discordant, or dissonant. Musical notes have overtones: vibrational frequencies that are integer multiples of the lowest (fundamental) frequency. Pleasant-sounding combinations of notes tend to have frequencies in simple whole-number ratios, so that many of their overtones coincide. The overtones of dissonant combinations are more irregularly related [3].
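Two notes whose frequencies are in a simple whole-number ratio share many overtones. The rough sketch below counts exactly coinciding overtones among the first ten of each note. It is only an illustration, not a model of perceived consonance. The note frequencies are hypothetical integers, which keeps the comparison exact, and 283 Hz stands in for an interval of ratio about \(\sqrt{2}\) (a tritone) above 200 Hz.

```python
def overtones(fundamental_hz: int, count: int = 10) -> list[int]:
    """The fundamental and its integer multiples: f, 2f, 3f, ..."""
    return [k * fundamental_hz for k in range(1, count + 1)]


def shared_overtones(f1_hz: int, f2_hz: int, count: int = 10) -> list[int]:
    """Frequencies present among the first `count` overtones of both notes."""
    return sorted(set(overtones(f1_hz, count)) & set(overtones(f2_hz, count)))


# Hypothetical note pairs.
print("octave (200, 400 Hz):", shared_overtones(200, 400))   # ratio 2:1
print("fifth  (200, 300 Hz):", shared_overtones(200, 300))   # ratio 3:2
print("tritone-like (200, 283 Hz):", shared_overtones(200, 283))
```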

Resonant frequencies

Musical instruments rely on resonance to amplify sound and produce specific notes. All objects have resonant, or natural, frequencies at which they tend to vibrate. When an object is subjected to a driving force at one of its resonant frequencies, it vibrates strongly, so a small driving force can produce a large-amplitude oscillation. In wind instruments, the resonant frequency is altered by varying the length of the vibrating air column. In stringed instruments, the musician alters the length and tension of the strings instead.

The Chladni plate in the video below provides a visualisation of resonant frequencies. A metal plate is sprinkled with sand and connected to a vibration generator. When the driving frequency matches one of the resonant modes of the plate, two-dimensional standing wave patterns form. The sand is shaken off the antinodes, where the vibrations are strongest, and settles along the nodes, where the vibrations cancel. At higher frequencies, the patterns become more intricate. The French mathematician Sophie Germain (1776-1831) worked on the theory of elasticity, including a mathematical treatment of the Chladni plate. You can read about Germain in our blog.
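For stringed instruments, the effect of length and tension on the resonant frequency can be made concrete with the standard result for an ideal, perfectly flexible string fixed at both ends: \(f_1 = \frac{1}{2L}\sqrt{T/\mu}\). Here \(L\) is the length, \(T\) the tension and \(\mu\) the mass per unit length. This is a minimal sketch with hypothetical string parameters. Real strings also have bending stiffness, which shifts their overtones slightly.

```python
import math


def string_fundamental(length_m: float, tension_n: float, linear_density_kg_per_m: float) -> float:
    """Fundamental frequency (Hz) of an ideal string fixed at both ends.

    The wave speed on the string is sqrt(T / mu) and the fundamental mode
    has wavelength 2L, so f1 = sqrt(T / mu) / (2L).
    """
    wave_speed = math.sqrt(tension_n / linear_density_kg_per_m)
    return wave_speed / (2.0 * length_m)


# Hypothetical string parameters, chosen only for illustration.
L, T, mu = 0.65, 70.0, 0.0004
f1 = string_fundamental(L, T, mu)
print(f"open string: {f1:.1f} Hz")
print(f"half length: {string_fundamental(L / 2, T, mu):.1f} Hz")  # doubled: one octave up
print(f"4x tension:  {string_fundamental(L, 4 * T, mu):.1f} Hz")  # also doubled
```

Halving the length or quadrupling the tension doubles the frequency, raising the note by an octave.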

Frequency is central to the research in the Mathematics of Waves and Materials group. Examples featured in our blog include the mathematical modelling of phononic crystals with frequency band gaps, which allow unwanted vibrational frequencies to be selectively eliminated, and the design and realisation of metamaterials for the reduction of low-frequency noise.
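A band gap can be seen in the simplest textbook toy model of a periodic structure: a one-dimensional chain of alternating masses \(m_1, m_2\) joined by identical springs. This is a generic illustration, not the group's model of phononic crystals. The sketch samples the chain's two dispersion branches over the first Brillouin zone and reports the range of frequencies for which no wave can propagate. All parameter values are hypothetical.

```python
import math


def diatomic_branches(k: float, m1: float, m2: float, spring: float, a: float = 1.0):
    """Acoustic and optical angular frequencies of a 1D diatomic mass-spring chain.

    Textbook dispersion relation, with K the spring constant and a the cell length:
        omega^2 = K (1/m1 + 1/m2) -/+ K sqrt((1/m1 + 1/m2)^2 - 4 sin^2(k a / 2) / (m1 m2))
    """
    s = 1.0 / m1 + 1.0 / m2
    disc = max(0.0, s * s - 4.0 * math.sin(k * a / 2.0) ** 2 / (m1 * m2))
    root = math.sqrt(disc)
    acoustic = math.sqrt(max(0.0, spring * (s - root)))  # clamp tiny negative rounding
    optical = math.sqrt(spring * (s + root))
    return acoustic, optical


def band_gap(m1: float, m2: float, spring: float, a: float = 1.0, samples: int = 200):
    """(top of acoustic band, bottom of optical band), sampling 0 <= k <= pi / a."""
    ks = [i * math.pi / (a * samples) for i in range(samples + 1)]
    branches = [diatomic_branches(k, m1, m2, spring, a) for k in ks]
    acoustic_top = max(ac for ac, _ in branches)
    optical_bottom = min(op for _, op in branches)
    return acoustic_top, optical_bottom


# Hypothetical parameters: unit spring constant and a 2:1 mass contrast.
low, high = band_gap(m1=1.0, m2=2.0, spring=1.0)
print(f"no propagating waves for {low:.3f} < omega < {high:.3f}")
```

The gap runs from \(\sqrt{2K/m_2}\) to \(\sqrt{2K/m_1}\), where \(K\) is the spring constant and \(m_2\) the heavier mass. It closes when the two masses are equal.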

[1] Mulligan, J. F. (1989) Heinrich Hertz and the Development of Physics, Physics Today 42 (3), 50

[2] Hogg, C., Neveu, M., Stokkan, K.-A., Folkow, L., Cottrill, P., Douglas, R., Hunt, D. M., and Jeffery, G. (2011) Arctic reindeer extend their visual range into the ultraviolet, Journal of Experimental Biology, 214, 2014-2019

[3] Ball, P. (2012) Why Dissonant Music Strikes the Wrong Chord in the Brain, Nature (London): n. pag. Web


G is for Ground Cloak

Over the past two decades, transformation-based techniques have enabled researchers to develop a range of engineered materials that can manipulate and control physical fields in new and exciting ways. We refer to these engineered materials as metamaterials, from the Greek word "meta", meaning beyond: materials that go beyond those of the natural world.

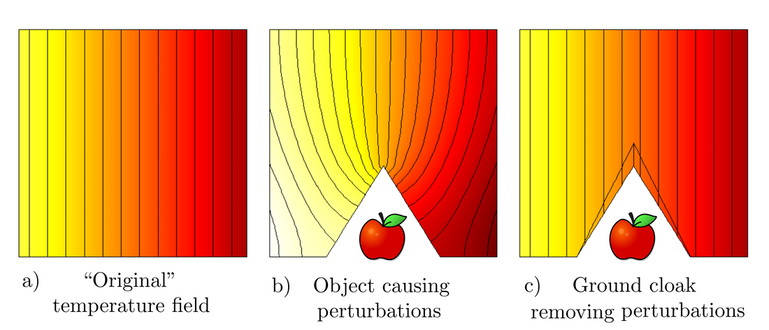

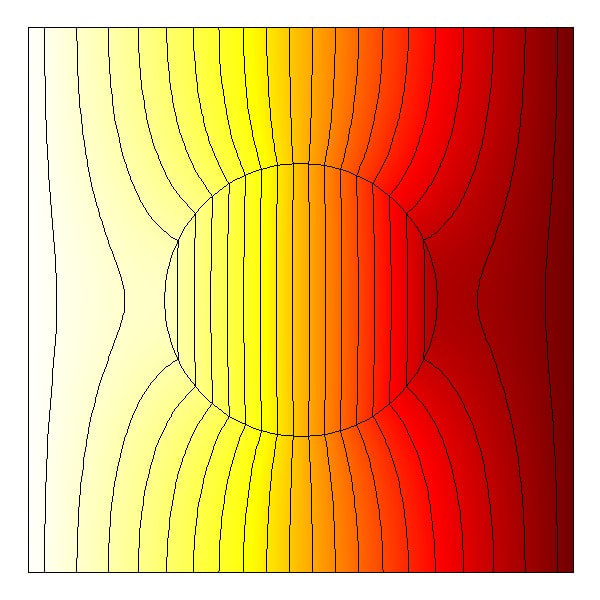

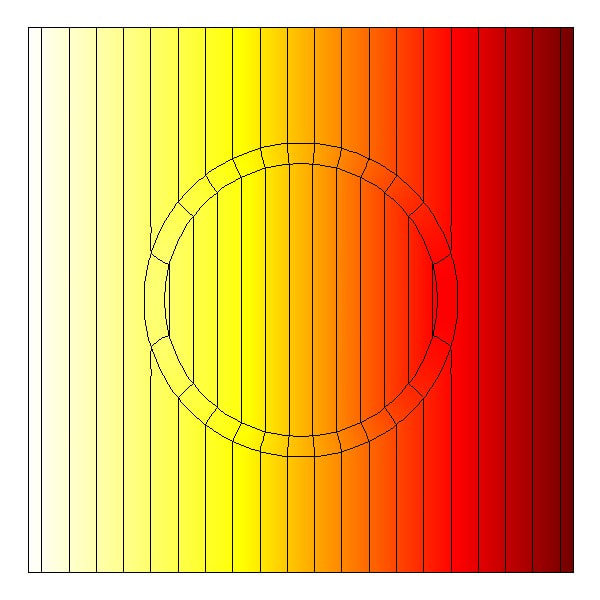

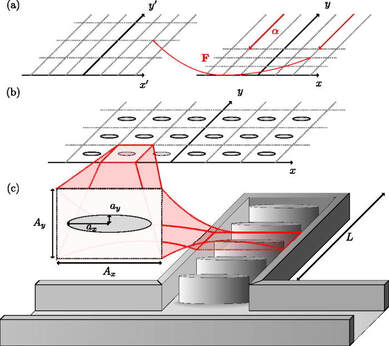

A particularly interesting example of a metamaterial is a cloaking device, in which a physical field is directed around a protected region without affecting the external field. As a result, any object placed inside the protected region goes undetected! For example, we can protect and conceal an object lying on a surface by covering it with an appropriate ground cloak, also referred to as a carpet cloak. Ground cloaks can be designed for many physical systems. For example, a two-dimensional thermal ground cloak is simulated in Figure 1c.


Observe that when part of the material domain is removed (or replaced by an object with different physical properties), unwanted perturbations form in the temperature field. A perturbation is any deviation of a field from its original state. In the final simulation, however, we integrate a ground cloak into the material domain so that the perturbations are removed and the temperature field returns to its original state, as desired.

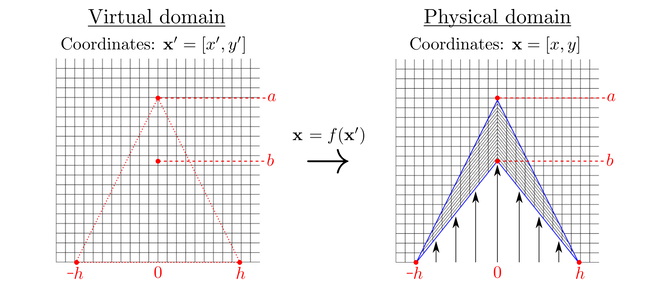

To achieve the desired effects, the ground cloak must have specific physical properties. We can determine the physical properties of a ground cloak (and other metamaterials) through a process called transformation theory, in which we consider both a virtual and a physical domain. The idea is as follows:


- Start with a virtual domain where the physical properties and physical fields are known.

- Define the region where the ground cloak (or an alternative metamaterial) is to be integrated.

- Apply a coordinate transformation to achieve the desired deformation in the physical domain.

- Calculate the physical properties of the metamaterial that will achieve the desired effects.

The appropriate ground cloak transformation is illustrated in Figure 2, where we wish to squeeze the red triangular region (in the virtual domain) into the blue arrowhead region (in the physical domain). The mapping is given by:

\begin{equation}

\begin{split}

x&=x' \\

y &= \dfrac{a-b}{a} y' + \dfrac{h - |x'|}{h}b

\end{split}

\end{equation}

Using this mapping, we can calculate the physical properties of the ground cloak. The main difficulty is that the calculated properties tend to be quite complex: they are typically anisotropic (direction dependent) and, for more general mappings, spatially varying. Such designs are difficult to create with conventional materials. This is why we turn to engineered metamaterials, where we can compose an artificial structure to account for these spatial and directional dependencies.
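The sketch below shows one way to carry out the "calculate the physical properties" step for the thermal case. It assumes steady heat conduction with an isotropic background conductivity \(\kappa_0\) in the virtual domain. It applies the standard transformation rule \(\kappa = \mathbf{J}\kappa_0\mathbf{J}^T/\det\mathbf{J}\), where \(\mathbf{J}\) is the Jacobian matrix of the mapping above from virtual \((x', y')\) to physical \((x, y)\) coordinates. For transient problems, the volumetric heat capacity is also divided by \(\det\mathbf{J}\); we omit that here. The dimensions \(a, b, h\) are hypothetical, and this is an illustration rather than the authors' design code.

```python
import math


def cloak_jacobian(x_virtual: float, a: float, b: float, h: float):
    """Jacobian matrix d(x, y)/d(x', y') of the ground-cloak mapping
        x = x',   y = (a - b)/a * y' + (h - |x'|)/h * b.
    The |x'| term makes the map non-smooth at x' = 0, so use x' != 0.
    """
    dy_dxv = -math.copysign(1.0, x_virtual) * b / h  # d/dx' of (h - |x'|) b / h
    dy_dyv = (a - b) / a
    return [[1.0, 0.0], [dy_dxv, dy_dyv]]


def cloak_conductivity(x_virtual: float, a: float, b: float, h: float, kappa0: float = 1.0):
    """Transformed conductivity tensor J (kappa0 I) J^T / det(J) for an isotropic background."""
    J = cloak_jacobian(x_virtual, a, b, h)
    det_j = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    # (J J^T)_ij = sum_k J_ik J_jk
    jjt = [[sum(J[i][k] * J[j][k] for k in range(2)) for j in range(2)] for i in range(2)]
    return [[kappa0 * jjt[i][j] / det_j for j in range(2)] for i in range(2)]


# Hypothetical dimensions: virtual triangle height a = 2, cloak inner height b = 1, half-width h = 3.
for side, x_v in (("right half", 1.0), ("left half", -1.0)):
    kappa = cloak_conductivity(x_v, a=2.0, b=1.0, h=3.0)
    print(side, [[round(v, 4) for v in row] for row in kappa])
```

The resulting tensor is anisotropic, and its off-diagonal terms have opposite signs in the two halves of the cloak. This illustrates why such properties are hard to obtain with conventional materials. In two dimensions, its determinant always equals \(\kappa_0^2\).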

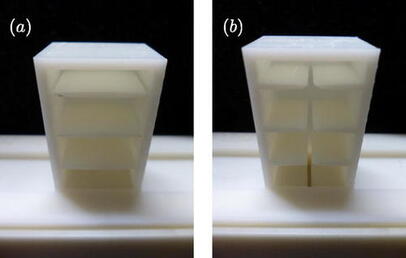

As a result, three-dimensional ground cloaks have been realised for many physical systems. Examples include a thermal ground cloak that controls heat flux [1], an acoustic ground cloak that controls sound waves [2], and a ground-plane cloak that controls electromagnetic waves at microwave frequencies [3].


E. Russell

[1] Yang, T., Wu, Q., Xu, W., Liu, D., Huang, L. & Chen, F. (2016) A thermal ground cloak, Phys. Lett. A, 380 (7-8), 965-969.

[2] Zigoneanu, L., Popa, B.-I. & Cummer, S. (2014) Three-dimensional broadband omnidirectional acoustic ground cloak, Nature Mater., 13, 352–355.

[3] Ma, H. & Cui, T. (2010) Three-dimensional broadband ground-plane cloak made of metamaterials, Nat. Commun., 1, 21.


H is for Helmholtz


Hermann von Helmholtz was a German physicist and scientific philosopher who made significant contributions to several areas of science. He was born in 1821 in what was then Prussia, now Germany. Given his significant contributions to mathematics, one might assume that Helmholtz studied mathematics as a student. However, due to financial pressures he studied medicine, and taught himself mathematics and philosophy in his spare time [1]. Helmholtz went on to have an illustrious academic career in physics and philosophy. He published many groundbreaking papers and supervised famous students such as Max Planck and Heinrich Hertz, who went on to make significant contributions to science themselves.

You may be wondering why Hermann von Helmholtz has made this A-Z list. Helmholtz carried out research in wave sciences such as acoustics and electromagnetism, and several mathematical theories and engineering designs arising from this work now bear his name.

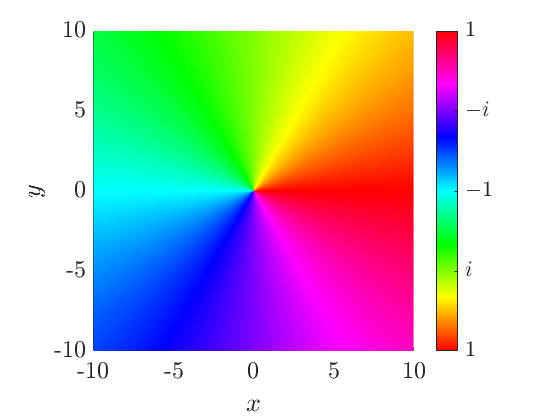

Helmholtz performed experiments on electric currents and concluded that electrical oscillations were periodic in time. This motivates the study of time-harmonic wave fields. A linear wave field \( \phi (\mathbf{x},t)\) that oscillates periodically in time at a single frequency can be written as a spatial function multiplied by a periodic function of time:

\begin{equation}

\phi(\mathbf{x},t) = \tilde {\phi}(\mathbf{x})\exp\{i 2\pi f t\}\tag{1},

\end{equation}

where \(f\) is the frequency of the wave. Substituting this form into the wave equation removes the time dependence, giving a simpler equation for \(\tilde{\phi}\):

\begin{equation}

\nabla^{2}\phi - \frac{1}{c_{0}^{2}}\frac{\partial^{2}\phi}{\partial t^{2}} = 0 \implies \nabla^{2}\tilde{\phi} + \frac{\omega^{2}}{c_{0}^{2}}\tilde{\phi} = 0\tag{2},

\end{equation}

where \(c_{0}\) is the speed of sound and \( \omega = 2\pi f\) is the angular frequency. The resulting equation is known as the Helmholtz equation.
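To see what the Helmholtz equation (2) requires of \(\tilde{\phi}\), the sketch below checks numerically, in one dimension, that the plane wave \(\tilde{\phi}(x) = \cos(kx)\) with \(k = \omega/c_0\) satisfies \(\tilde{\phi}'' + k^2\tilde{\phi} = 0\). The speed of sound (343 m/s), the frequency (440 Hz) and the finite-difference step are assumed values, chosen for illustration.

```python
import math


def helmholtz_residual(phi, x: float, k: float, step: float = 1e-4) -> float:
    """Central-difference estimate of phi''(x) + k^2 phi(x), the 1D Helmholtz operator."""
    second_derivative = (phi(x + step) - 2.0 * phi(x) + phi(x - step)) / step**2
    return second_derivative + k**2 * phi(x)


c0 = 343.0                 # assumed speed of sound in air, m/s
f = 440.0                  # assumed frequency, Hz
omega = 2.0 * math.pi * f
k = omega / c0             # wavenumber omega / c0


def plane_wave(x: float) -> float:
    """Spatial part of a 1D time-harmonic wave, phi~(x) = cos(k x)."""
    return math.cos(k * x)


points = [0.0, 0.1, 0.25, 0.5, 1.0]
max_residual = max(abs(helmholtz_residual(plane_wave, x, k)) for x in points)
print(f"k = {k:.3f} rad/m, largest residual = {max_residual:.1e}")
```

The residual is small but not exactly zero because of the finite-difference approximation. Using any other wavenumber in place of \(\omega/c_0\) makes it large.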


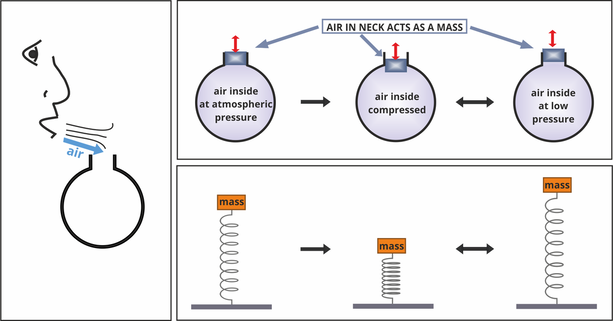

Helmholtz also carried out research in acoustics, including experiments on acoustic resonators. One type of resonator, now known as a Helmholtz resonator, was of particular interest to him. It consists of a narrow neck opening into a larger cavity. At certain frequencies, which depend on the dimensions of the resonator, resonance occurs. In effect, the air in the neck acts as a mass and the air in the cavity acts as a spring, and the relationship between the neck and cavity dimensions determines the resonant frequency.

Two types of resonance can occur. The first kind can be heard by blowing across the top of a bottle: when the air inside the bottle resonates, a louder sound is produced. Air pushed into the neck compresses the air in the bottle and raises its pressure. This pressure then pushes the air back out, and its momentum carries it too far, leaving a pressure deficit inside the bottle that draws air back in, and so on. These rapid pressure oscillations generate sound. The second kind of resonance can be used to damp sound: at resonance, energy is lost through viscous losses in the thin neck. These absorbing resonators are used to line jet engines to attenuate unwanted noise [2].
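Treating the air in the neck as a mass and the air in the cavity as a spring leads to the standard lumped-element estimate of the resonant frequency, \(f = \frac{c}{2\pi}\sqrt{A/(VL)}\). Here \(A\) is the neck's cross-sectional area, \(L\) its length and \(V\) the cavity volume. The sketch below assumes the resonator is small compared with the wavelength and ignores losses. By default it also omits the end correction usually added to the neck length. The bottle dimensions are hypothetical.

```python
import math


def helmholtz_frequency(neck_radius_m, neck_length_m, cavity_volume_m3,
                        speed_of_sound=343.0, end_correction_m=0.0):
    """Lumped-element estimate f = c / (2 pi) * sqrt(A / (V * L_eff)).

    The air in the neck acts as the mass and the air in the cavity as the spring.
    `end_correction_m` is added to the neck length to give L_eff; leaving it at
    zero is a simplifying assumption.
    """
    area = math.pi * neck_radius_m ** 2
    effective_length = neck_length_m + end_correction_m
    return speed_of_sound / (2 * math.pi) * math.sqrt(area / (cavity_volume_m3 * effective_length))


# Hypothetical bottle: 1 cm neck radius, 5 cm neck length, 0.75 litre body.
f = helmholtz_frequency(0.01, 0.05, 0.75e-3)
print(f"estimated resonance: {f:.0f} Hz")
print(f"four times the volume: {helmholtz_frequency(0.01, 0.05, 3.0e-3):.0f} Hz")
```

A larger cavity lowers the resonant frequency: quadrupling the volume halves it.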


T. White

[1] Turner, R. S. (2008) Helmholtz, Hermann von, in Complete Dictionary of Scientific Biography, vol. 6, New York, NY: Charles Scribner's Sons, 241-253.

[2] Rienstra, S. W. & Hirschberg, A. (2004) An introduction to acoustics, Technische Universiteit Eindhoven, Eindhoven.


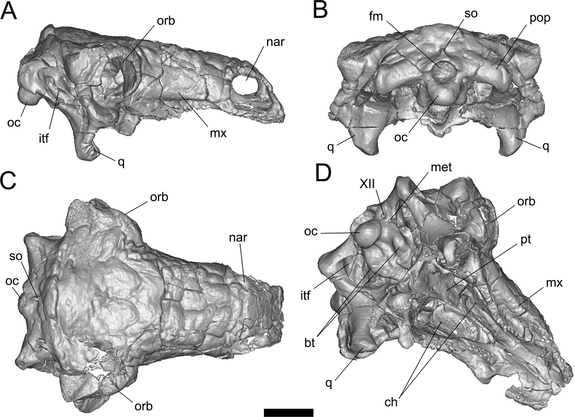

I is for Inclusions


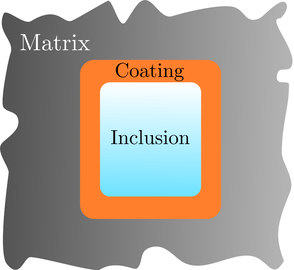

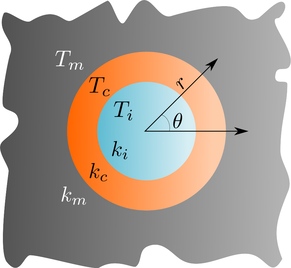

Inclusions are disjoint components within a multiphase material whose physical properties differ significantly from those of the surrounding matrix phase. Usually, inclusions collectively make up a minority of the overall material's volume. The symbol \(\phi\) or \(c\) is often used to denote the volume fraction of the inclusion phase, the latter by analogy with concentration in chemistry. In material design, the inclusion phase is typically added to enhance physical performance in a particular context while respecting practical constraints, for example to increase the compressive or tensile strength while reducing the density. One or more types of inclusion can be combined within the matrix phase to create a hybrid composite material whose overall functionality improves on that of its individual ingredients.

The word "inclusion" suggests that a deliberate choice has been made to include additional objects within the matrix material, but inclusions can also be unintentional, for example impurities introduced in a manufacturing process. Inclusions also occur in natural materials, e.g. fossils, and flaws in diamonds. Inclusions in manufactured composite materials are typically solid or hollow particles, rods or fibres, or air bubbles, depending on the intended applications of the material. Note that each inclusion may itself be a multiphase object; for example, a hollow glass sphere has an outer glass phase and an inner air phase. In general, symmetric shapes, particularly spherical or cylindrical inclusions, are preferred so that the benefits are reasonably predictable and reliable across different samples of the same composite. Materials with symmetric and well-dispersed inclusions are also more amenable to study by mathematical modelling and computer simulation. Below we briefly consider the case of hollow spherical inclusions, assumed to be isolated from each other, under small strains.


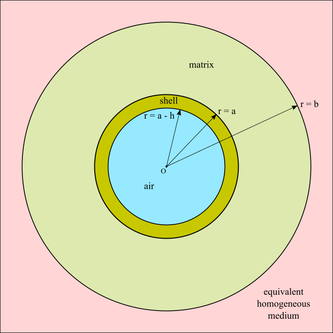

A versatile mathematical model of the influence of spherical or cylindrical inclusions on a material's elastic properties is the Generalized Self-Consistent Method (Christensen & Lo, 1979). In this model, the domain is composed of four phases, as shown in Figure 2. A single hollow inclusion is composed of an inner air (or void) phase, \(0 \leq r \leq a - h\), and an outer solid shell phase, \(a - h \leq r \leq a\). Solid inclusions may be considered as the special case \(h \to a\). The inclusion is surrounded by matrix material occupying \(a \leq r \leq b\), where for consistency we impose \(a^3 / b^3 = c\), with \(c\) the global volume fraction of inclusions. In this sense, each inclusion is embedded within its own "share" of the matrix material. The region \(r \geq b\) is referred to as the equivalent homogeneous medium. It is this phase that ultimately provides the effective properties of the overall composite material, once the equations of linear elasticity have been solved in each phase. The solution is subject to an applied far-field displacement and a strain energy equivalence condition, with appropriate boundary conditions at the interfaces \(r = a - h\), \(r = a\), and \(r = b\). Note that, in this model, the problem can be made dimensionless and the solutions can be expressed in terms of the inclusion's aspect ratio \(\eta = 1 - h/a\). Therefore, inclusions of different diameters but the same value of \(\eta\) have the same solution.
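The sketch below covers only the geometric bookkeeping of the four-phase model described above, not the elasticity solution. Given \(a\), \(h\) and \(c\), it returns \(b\), \(\eta\) and the volume fraction of each phase within \(r \leq b\). Comparing two inclusions with different radii but the same \(\eta\) shows that these dimensionless quantities coincide, consistent with the scaling argument. The function name and parameter values are hypothetical.

```python
def gscm_geometry(a: float, h: float, c: float) -> dict:
    """Geometric bookkeeping for the four-phase model of a hollow spherical inclusion.

    a : outer radius of the inclusion
    h : shell thickness (h = a is a solid inclusion)
    c : global volume fraction of inclusions, imposed through a**3 / b**3 = c
    """
    if not (0.0 < h <= a and 0.0 < c < 1.0):
        raise ValueError("require 0 < h <= a and 0 < c < 1")
    b = a / c ** (1.0 / 3.0)   # outer radius of the inclusion's "share" of matrix
    inner = a - h              # radius of the air (void) core
    eta = 1.0 - h / a          # aspect ratio = inner / outer radius
    return {
        "b": b,
        "eta": eta,
        # Fractions of the sphere r <= b occupied by each phase
        "void_fraction": (inner / b) ** 3,
        "shell_fraction": (a**3 - inner**3) / b**3,
        "matrix_fraction": 1.0 - c,
    }


# Two hypothetical inclusions with different sizes but the same eta = 0.9.
small = gscm_geometry(a=1.0, h=0.1, c=0.3)
large = gscm_geometry(a=5.0, h=0.5, c=0.3)
for name, geom in (("small", small), ("large", large)):
    print(name, {key: round(val, 6) for key, val in geom.items()})
```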

The method described above can be extended to account for materials containing multiple types of inclusion, with different diameters and shell stiffness properties for example, by volume averaging over the equivalent medium relating to each type (Bardella & Genna, 2001). Although the equations are algebraically cumbersome, in most applications a majority of the parameters can be considered as fixed, and the Young's modulus of the composite material can be computed as a function of the remaining free parameters. In the polydisperse case, it is important to have an accurate characterization of the inclusion phase via experimental imaging, although often a good approximation can be obtained via the volume-weighted mean diameter of the inclusions.
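The text does not say which definition of the volume-weighted mean diameter is meant. A common choice, assumed here, is the De Brouckere mean \(D[4,3] = \sum n_i d_i^4 / \sum n_i d_i^3\), in which each particle counts in proportion to its volume. The size distribution below is hypothetical.

```python
def volume_weighted_mean_diameter(diameters, counts=None):
    """De Brouckere mean D[4,3] = sum(n * d**4) / sum(n * d**3).

    Each particle is weighted by its volume (proportional to d**3), so large
    inclusions dominate the average more than in a simple number mean.
    """
    if counts is None:
        counts = [1] * len(diameters)
    numerator = sum(n * d**4 for d, n in zip(diameters, counts))
    denominator = sum(n * d**3 for d, n in zip(diameters, counts))
    return numerator / denominator


# Hypothetical size distribution (diameters in micrometres, number of particles).
diameters = [20.0, 40.0, 60.0]
counts = [100, 20, 5]
number_mean = sum(d * n for d, n in zip(diameters, counts)) / sum(counts)
d43 = volume_weighted_mean_diameter(diameters, counts)
print(f"number mean = {number_mean:.1f} um, volume-weighted mean = {d43:.1f} um")
```

The few large inclusions pull the volume-weighted mean well above the simple number mean, which matters because they also occupy most of the inclusion volume.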

N. F. Morrison

[1] Bardella, L. & Genna, F. (2001) On the elastic behavior of syntactic foams, International Journal of Solids and Structures, 38, 7235-7260.

[2] Christensen, R. M. & Lo, K. H. (1979) Solutions for effective shear properties in three phase sphere and cylinder models, Journal of the Mechanics and Physics of Solids, 27, 315-330.


J is for Jacobian

Both linear and nonlinear multivariable functions can be thought of as transformations of space. The Jacobian of a function, named after the German mathematician Carl Jacobi, measures how much the function locally stretches (or compresses) the original space. In particular, when making a change of variables, the Jacobian arises as a multiplicative factor within an integral to account for the change of coordinates.

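For a multivariable function \(\boldsymbol{f} : \mathbb{R}^n \rightarrow \mathbb{R}^m\), consider the \(m \times n\) matrix of first partial derivatives, \(\mathbf{J}\). When \(m = n\), this matrix is square, and the Jacobian of \(\boldsymbol{f}\), denoted by \(J\), is defined to be its determinant. The matrix \(\mathbf{J}\) is given by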

\begin{equation}

\mathbf{J} = \left[ \begin{matrix} \dfrac{\partial f_1}{\partial x_1} & \cdots & \dfrac{\partial f_1}{\partial x_n} \\

\vdots & \ddots & \vdots \\ \dfrac{\partial f_m}{\partial x_1} & \cdots & \dfrac{\partial f_m}{\partial x_n}

\end{matrix} \right]\tag{1}

\end{equation}

This matrix represents what \(\boldsymbol{f}(\boldsymbol{x})\) looks like locally, as a linear transformation. We refer to \(\mathbf{J}\) as the Jacobian matrix of \(\boldsymbol{f}(\boldsymbol{x})\).


Linear Transformations

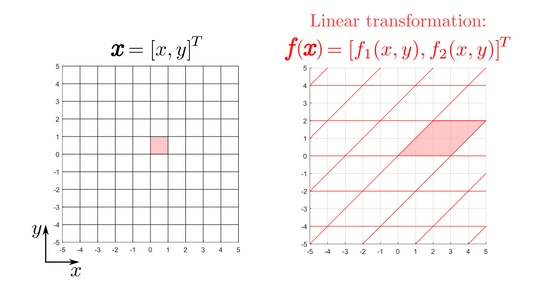

Linear transformations of space are special in that they can be represented by matrices with constant (scalar) entries. For example, consider the linear function \(\boldsymbol{f} : \mathbb{R}^2 \rightarrow \mathbb{R}^2\) given by

\begin{equation}

\boldsymbol{f}(\boldsymbol{x}) = \left[ \begin{matrix} f_1(x,y) \\ f_2(x,y) \end{matrix} \right] = \left[ \begin{matrix} 3x + 2y \\ 2y \end{matrix} \right] = \left[ \begin{matrix} 3 & 2 \\ 0 & 2 \end{matrix} \right] \boldsymbol{x}\tag{2}

\end{equation}

where the \(2 \times 2\) matrix on the right-hand side of \((2)\) represents the linear transformation in matrix form.

Geometrically, the result of a linear transformation is that the grid lines remain parallel and evenly spaced. As a result, each unit square is mapped onto a parallelogram. For example, Figure 1 shows how \(\boldsymbol{f}(\boldsymbol{x})\) affects the grid lines of two-dimensional Euclidean space.


Note that the transformed basis vector in the \(x\)-direction corresponds to the first column of the scalar matrix, since

\begin{equation}

\boldsymbol{f}\left(\left[ \begin{matrix} 1 \\ 0 \end{matrix} \right]\right) = \left[ \begin{matrix} 3 & 2 \\ 0 & 2 \end{matrix} \right]\left[ \begin{matrix} 1 \\ 0 \end{matrix} \right] = \left[ \begin{matrix} 3 \\ 0 \end{matrix} \right]\tag{3}

\end{equation}

Similarly, the second column of the matrix corresponds to the transformed basis vector in the \(y\)-direction. These transformed basis vectors represent the edges of the parallelogram in transformed space. Therefore, the area of the red parallelogram in Figure 1 is given by the determinant of the scalar matrix, i.e.

\begin{equation}

\begin{matrix}

\text{area of} \\ \text{parallelogram}

\end{matrix} \ = \left| \begin{matrix} 3 & 2 \\ 0 & 2 \end{matrix} \right| = (3 \times 2) - (0 \times 2) = 6 \ \text{unit squares}\tag{4}

\end{equation}

In other words, the linear transformation in \((2)\) scales the area of any region of the original space by a factor of 6.

This is the motivation behind the Jacobian. For linear transformations, finding the area of the relevant parallelogram is equivalent to finding the Jacobian, because the scalar matrix that represents a linear transformation is equal to its Jacobian matrix.
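The link between the determinant and the area of the transformed unit square can be checked numerically. The sketch below approximates the Jacobian matrix of the linear map (2) by central finite differences (a generic numerical approach, with an assumed step size) and takes its determinant. It then compares the result with the area of the image of the unit square, computed with the shoelace formula.

```python
def jacobian_fd(func, x, y, step=1e-6):
    """Central-difference approximation to the 2x2 Jacobian matrix of func at (x, y)."""
    fxp, fxm = func(x + step, y), func(x - step, y)
    fyp, fym = func(x, y + step), func(x, y - step)
    return [
        [(fxp[0] - fxm[0]) / (2 * step), (fyp[0] - fym[0]) / (2 * step)],
        [(fxp[1] - fxm[1]) / (2 * step), (fyp[1] - fym[1]) / (2 * step)],
    ]


def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def shoelace_area(points):
    """Area of a simple polygon whose vertices are listed in order."""
    pairs = zip(points, points[1:] + points[:1])
    return abs(sum(x0 * y1 - x1 * y0 for (x0, y0), (x1, y1) in pairs)) / 2.0


def f(x, y):
    """The linear map (2): f(x, y) = (3x + 2y, 2y)."""
    return (3 * x + 2 * y, 2 * y)


J = jacobian_fd(f, 0.5, -1.0)  # any point gives the same matrix for a linear map
unit_square = [(0, 0), (1, 0), (1, 1), (0, 1)]
image = [f(px, py) for px, py in unit_square]
print("Jacobian matrix:", [[round(v, 6) for v in row] for row in J])
print("Jacobian:", round(det2(J), 6), " area of transformed square:", shoelace_area(image))
```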


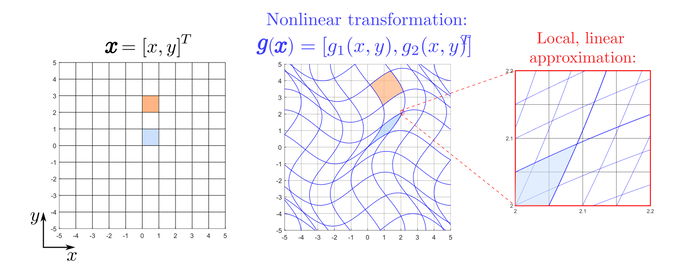

Nonlinear Transformations

Nonlinear transformations lead to grid lines that are no longer parallel and evenly spaced. As a result, the transformation of each unit square depends on its location, i.e. the Jacobian varies from point to point. This can be seen in Figure 2, where we consider the nonlinear function \(\boldsymbol{g} : \mathbb{R}^2 \rightarrow \mathbb{R}^2\) given by

\begin{equation}

\boldsymbol{g}(\boldsymbol{x}) = \left[ \begin{matrix} g_1(x,y) \\ g_2(x,y) \end{matrix} \right] = \left[ \begin{matrix} 1.25x + \sin(y) \\ \sin(x) + 1.25y \end{matrix} \right]

\tag{5}

\end{equation}

We see that the transformation compresses the blue unit square \((J<1)\) whereas the orange unit square is stretched \((J>1)\).


As we zoom in on a small region of the transformed space, the grid lines look increasingly like those of a linear transformation. We use the Jacobian matrix of \(\boldsymbol{g}(\boldsymbol{x})\), given by

\begin{equation}

\mathbf{J} = \left[ \begin{matrix} \dfrac{\partial g_1}{\partial x_1} & \dfrac{\partial g_1}{\partial x_2} \\ \dfrac{\partial g_2}{\partial x_1} & \dfrac{\partial g_2}{\partial x_2} \end{matrix} \right] = \left[ \begin{matrix} 1.25 & \cos(y) \\ \cos(x) & 1.25 \end{matrix} \right]\tag{6}

\end{equation}

to represent what the transformation looks like locally, as a linear transformation. For example, in Figure 2 we have zoomed in on the top right corner of the transformed blue unit square. In the original space, this point is given by \([1,1]^T\). Therefore, the Jacobian of \(\boldsymbol{g}(\boldsymbol{x})\) around this point is approximately

\begin{equation}

J = \left| \begin{matrix} 1.25 & \cos(1) \\ \cos(1) & 1.25 \end{matrix} \right| \approx (1.25 \times 1.25) - (0.54 \times 0.54) \approx 1.27\tag{7}

\end{equation}

In other words, the nonlinear transformation in \((5)\) stretches small areas around the point \([1,1]^T\) by a factor of approximately 1.27. For nonlinear transformations, then, we can think of the Jacobian matrix as the best linear approximation of the transformation near a given point. The Jacobian is the factor by which that approximation scales areas, i.e. the area of the approximating parallelogram.
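The same check can be repeated for the nonlinear map (5). The sketch below evaluates the Jacobian at \([1,1]^T\) both exactly, from (6), and by central finite differences. It then compares the result with the area of the image of ever smaller squares with a corner at that point, divided by their original area. The square sizes and step size are our own assumed choices. As the squares shrink, the area ratios approach the Jacobian, which is the sense in which it describes the transformation locally.

```python
import math


def g(x, y):
    """The nonlinear map (5)."""
    return (1.25 * x + math.sin(y), math.sin(x) + 1.25 * y)


def jacobian_det_fd(func, x, y, step=1e-6):
    """Determinant of a central-difference approximation to the Jacobian matrix at (x, y)."""
    fxp, fxm = func(x + step, y), func(x - step, y)
    fyp, fym = func(x, y + step), func(x, y - step)
    j11, j21 = (fxp[0] - fxm[0]) / (2 * step), (fxp[1] - fxm[1]) / (2 * step)
    j12, j22 = (fyp[0] - fym[0]) / (2 * step), (fyp[1] - fym[1]) / (2 * step)
    return j11 * j22 - j12 * j21


def image_area_ratio(func, x, y, side):
    """Area of the image of the square with lower-left corner (x, y), divided by side**2.

    The image is approximated by the polygon through the mapped corners (shoelace formula).
    """
    corners = [(x, y), (x + side, y), (x + side, y + side), (x, y + side)]
    pts = [func(cx, cy) for cx, cy in corners]
    twice_area = sum(x0 * y1 - x1 * y0 for (x0, y0), (x1, y1) in zip(pts, pts[1:] + pts[:1]))
    return abs(twice_area) / 2.0 / side**2


exact = 1.25**2 - math.cos(1.0) ** 2          # determinant of (6) at x = y = 1
numeric = jacobian_det_fd(g, 1.0, 1.0)
ratios = {side: image_area_ratio(g, 1.0, 1.0, side) for side in (0.1, 0.01, 0.001)}
print(f"exact J = {exact:.4f}, finite-difference J = {numeric:.4f}")
for side, ratio in ratios.items():
    print(f"square of side {side}: area ratio = {ratio:.4f}")
```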


E. Russell

K is for Kelvin

William Thomson was a British mathematician and engineer born in Belfast. Thomson was ennobled as Lord Kelvin, taking his title from the River Kelvin, which flows past the University of Glasgow, where he served as a professor for 52 years. Kelvin was a highly decorated mathematician and served as President of the Royal Society from 1890 to 1895.

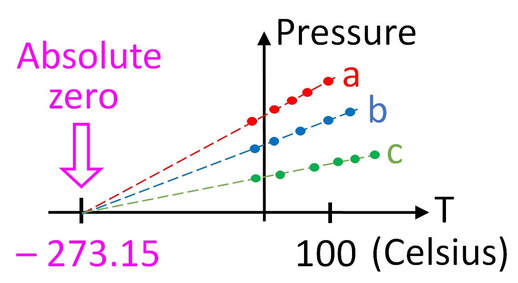

Many mathematical and physical phenomena have been named after Kelvin in the years since his death. Most notably, the SI base unit of temperature is the kelvin. A temperature difference of one kelvin is the same as a difference of one degree Celsius, but the 'zero' of the scale has been shifted. On the Celsius scale, 0\(^{\circ}\)C is the freezing point of water. The kelvin scale, on the other hand, is defined so that 0 K is absolute zero: the temperature at which particles reach their minimum internal energy [1].



The concept of absolute zero had been discussed before Kelvin, but a value had never been assigned. Kelvin considered the ideal gas law \(PV = nRT\), where \(P\) is pressure, \(V\) is volume, \(n\) is the amount of substance, \(R\) is the ideal gas constant and \(T\) is temperature. He considered the pressure of an ideal gas at ambient temperatures measured in Celsius and exploited the linear relationship between \(P\) and \(T\). By extrapolating these lines to the point where \(P = 0\), he found that absolute zero lies at about -273\(^{\circ}\)C. Today, 0 K is defined to be exactly -273.15\(^{\circ}\)C. Figure 1 shows how this extrapolation can be done and how the absolute zero temperature remains the same for three different samples.
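The extrapolation in Figure 1 amounts to fitting a straight line to pressure against Celsius temperature and finding where it crosses \(P = 0\). The sketch below does this with a least-squares fit for three hypothetical samples, each a different amount of gas in the same assumed volume. The "measurements" are generated from the ideal gas law itself, so the fit recovers -273.15\(^{\circ}\)C by construction. The point is to show the extrapolation procedure, not to provide new data.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


R = 8.314  # ideal gas constant, J / (mol K)


def synthetic_pressures(temps_celsius, n_mol, volume_m3):
    """Pressures (Pa) generated from PV = nRT; synthetic data, not measurements."""
    return [n_mol * R * (t + 273.15) / volume_m3 for t in temps_celsius]


temps = [0.0, 25.0, 50.0, 75.0, 100.0]   # temperatures in degrees Celsius
zero_pressure_temps = []
for n_mol in (0.5, 1.0, 2.0):            # three hypothetical gas samples
    slope, intercept = fit_line(temps, synthetic_pressures(temps, n_mol, 0.0224))
    zero_pressure_temps.append(-intercept / slope)   # where the fitted line reaches P = 0
    print(f"n = {n_mol} mol: P = 0 at {zero_pressure_temps[-1]:.2f} degrees C")
```

Each sample gives a line with a different slope, but all three lines reach zero pressure at the same temperature.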


Another problem studied by Kelvin, and by Hermann von Helmholtz (a previous entry in this A-Z), is the Kelvin-Helmholtz instability. This instability arises when there is a velocity difference across the interface between two fluids. The interface is pulled up and rolls into spirals, as can be seen in Figure 2, where wave-like structures have formed in the clouds. Kelvin-Helmholtz instabilities can be seen in Earth's atmosphere and oceans, as well as in the atmospheres of other planets [2].
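For two deep, inviscid layers separated by a vortex sheet, linear theory gives a classical closed-form growth rate, quoted here without surface tension. The sketch below evaluates it. The densities, velocity difference and value of \(g\) are hypothetical. Because surface tension and viscosity are neglected, all sufficiently short waves are unstable in this idealised model.

```python
import math


def kh_growth_rate(k, delta_u, rho_lower, rho_upper, g=9.81):
    """Linear growth rate (1/s) of a Kelvin-Helmholtz disturbance with wavenumber k (rad/m).

    Inviscid vortex sheet between two deep layers, no surface tension:
        sigma^2 = k^2 rho1 rho2 dU^2 / (rho1 + rho2)^2 - g k (rho1 - rho2) / (rho1 + rho2),
    where rho1 is the lower (denser) fluid. Returns 0.0 for stable wavenumbers.
    """
    total = rho_lower + rho_upper
    shear_term = k**2 * rho_lower * rho_upper * delta_u**2 / total**2
    gravity_term = g * k * (rho_lower - rho_upper) / total
    return math.sqrt(max(0.0, shear_term - gravity_term))


def critical_wavenumber(delta_u, rho_lower, rho_upper, g=9.81):
    """Wavenumber above which shear overcomes gravity: g (rho1^2 - rho2^2) / (rho1 rho2 dU^2)."""
    return g * (rho_lower**2 - rho_upper**2) / (rho_lower * rho_upper * delta_u**2)


# Hypothetical layers: slightly denser air below, 5 m/s velocity difference.
rho1, rho2, du = 1.25, 1.20, 5.0
kc = critical_wavenumber(du, rho1, rho2)
print(f"unstable for wavelengths shorter than about {2 * math.pi / kc:.0f} m")
for k in (0.5 * kc, 2 * kc, 10 * kc):
    print(f"k = {k:.4f} rad/m: growth rate = {kh_growth_rate(k, du, rho1, rho2):.4f} 1/s")
```

Gravity stabilises long waves when the denser fluid is below, while shear destabilises short ones. The critical wavenumber marks the balance between the two effects.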

Helmholtz was the first to recognise that instabilities can form as a result of shear flow, but it was Kelvin who first posed and fully solved the problem of the linear instability of a vortex sheet between two layers of fluid with different densities. The instabilities form as kinetic energy from the relative motion of the two layers is converted into the potential energy needed to raise or lower the fluid, causing vortical wave shapes to form in the shear flow [3].

T. White


[1] Erlichson, H. (2001) Kelvin and the absolute temperature scale, Eur. J. Phys., 22(4), 325.

[2] Vujinovic, A. and Rakovec, J. (2015) Kelvin-Helmholtz Instability, Univerza v Ljubljani Fakulteta za Matematiko in Fiziko.

[3] Drazin, P. G. (1970) Kelvin–Helmholtz instability of finite amplitude, J. Fluid Mech., 42(2), 321-335.


L is for Lamb Waves

Sir Horace Lamb FRS (1849-1934) was a British applied mathematician responsible for groundbreaking research in the fields of fluid and solid mechanics. His outstanding contributions extend to other fields such as electromagnetism. Outside academic research, he also contributed to the education system at both national and institutional levels [1]. Lamb was an excellent lecturer and writer, and some of his books remain relevant and widely used today, as is the case with Hydrodynamics, originally published in 1895.

Lamb has a particularly strong connection with Manchester: he was born in nearby Stockport, and he was a professor at the University of Manchester (still Owens College when he arrived) for over three decades, holding the Beyer Chair of Applied Mathematics. During his time at Manchester he published much of his original work and interacted with other great scientists, such as the fluid dynamicist Osborne Reynolds.

Since Lamb, the Beyer Chair has been held by many great mathematicians, including Sir James Lighthill and, more recently, one of MWM's founders, Professor Ian David Abrahams. Manchester also has a chair named after Lamb, the Sir Horace Lamb Chair, which remains occupied by its first holder, Professor Oliver Jensen, and some of Lamb's original furniture is still in the Mathematics department.

One of the problems that attracted the attention of Lamb was understanding the different modes of vibration of an (infinite) elastic plate, which back then was already of practical interest to the seismology community.

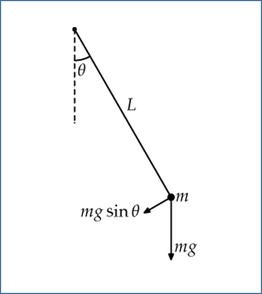

The strategy was to look for travelling-wave solutions of the equations of linear elasticity (see E is for Elasticity, above) that satisfy traction-free boundary conditions (see B is for Boundary Conditions) on the top and bottom faces of the plate, which is assumed to extend infinitely in the other two spatial directions. The problem then reduces to solving particular dispersion equations, which relate a wave's frequency (see F is for Frequency) to its wavenumber. Such equations are of paramount importance in wave physics, since they encode the essential information about how waves move in a given system.


For an elastic plate of thickness \(d\), made of a material with Lamé constants \(\lambda, \mu\) and density \(\rho\), and for waves of angular frequency \(\omega\), the Lamb wave dispersion equations are given explicitly by

\begin{equation}
\frac{\tanh{(\gamma_t d/ 2)}}{\tanh{(\gamma_l d/2)}} = \frac{4 \gamma_l \gamma_t k^2}{(k^2 + \gamma_t^2)^2}, \qquad\textrm{(Symmetric)} \quad\tag{1a}
\end{equation}

\begin{equation}
\frac{\tanh{(\gamma_t d/ 2)}}{\tanh{(\gamma_l d/2)}} = \frac{(k^2 + \gamma_t^2)^2}{4 \gamma_l \gamma_t k^2}, \qquad\textrm{(Anti-symmetric)}\quad\tag{1b}
\end{equation}

with

\begin{equation}
\gamma_l^2 = k^2 - k_l^2 = k^2 -\frac{\rho \omega^2}{\lambda + 2\mu}, \qquad \gamma_t^2 = k^2 - k_t^2 = k^2 - \frac{\rho \omega^2}{\mu}. \tag{2}
\end{equation}
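A direct root search on (1a) and (1b) as written is awkward, because the ratios of hyperbolic tangents have poles. The Python sketch below is our own illustration, not the method used for the figures in this post, and the helper names `lamb_residuals` and `real_roots` are hypothetical. It substitutes \(\gamma_l = ip\), \(\gamma_t = iq\) with \(p^2 = k_l^2 - k^2\) and \(q^2 = k_t^2 - k^2\), which turns (1a) into \(\tan(qd/2)/\tan(pd/2) = -4k^2pq/(q^2-k^2)^2\) (and similarly for (1b)), clears the denominators, and then scans real \(k\) for sign changes before refining each one by bisection. It only finds real (propagating) wavenumbers; complex roots, such as those visualised in Figure 3, would need a complex root finder.

```python
import cmath


def lamb_residuals(k, k_l, k_t, d):
    """Pole-free residuals (S, A) of the symmetric (1a) and anti-symmetric (1b)
    Lamb equations at a real wavenumber k.

    Writing gamma_l = i p and gamma_t = i q, with p = sqrt(k_l^2 - k^2) and
    q = sqrt(k_t^2 - k^2), turns tanh into tan. Clearing denominators and
    dividing by q (symmetric) or p (anti-symmetric) leaves functions that are
    even in p and q, so they are real for real k whichever sqrt branch is used.
    """
    h = d / 2                      # half-thickness
    p = cmath.sqrt(k_l**2 - k**2)
    q = cmath.sqrt(k_t**2 - k**2)
    w = (k_t**2 - 2 * k**2) ** 2   # equals (k^2 + gamma_t^2)^2

    def sin_over(z):
        # sin(z h) / z, which tends to h as z -> 0
        return h if abs(z) < 1e-12 else cmath.sin(z * h) / z

    sym = w * cmath.cos(p * h) * sin_over(q) + 4 * k**2 * p * cmath.sin(p * h) * cmath.cos(q * h)
    anti = 4 * k**2 * q * cmath.sin(q * h) * cmath.cos(p * h) + w * sin_over(p) * cmath.cos(q * h)
    return sym.real, anti.real


def real_roots(f, k_min, k_max, n=4000, tol=1e-12):
    """Real roots of f on [k_min, k_max]: bracket sign changes on a uniform grid,
    then refine each bracket by bisection. Roots of even multiplicity are missed."""
    ks = [k_min + (k_max - k_min) * i / n for i in range(n + 1)]
    vals = [f(k) for k in ks]
    roots = []
    for lo, hi, f_lo, f_hi in zip(ks, ks[1:], vals, vals[1:]):
        if f_lo == 0.0:
            roots.append(lo)
        elif f_lo * f_hi < 0:
            while hi - lo > tol:
                mid = 0.5 * (lo + hi)
                f_mid = f(mid)
                if f_lo * f_mid <= 0:
                    hi = mid
                else:
                    lo, f_lo = mid, f_mid
            roots.append(0.5 * (lo + hi))
    return roots


k_l, k_t, d = 1.2, 2.0, 2.0  # the values used for Figure 3
sym = real_roots(lambda k: lamb_residuals(k, k_l, k_t, d)[0], 1e-3, 10.0)
anti = real_roots(lambda k: lamb_residuals(k, k_l, k_t, d)[1], 1e-3, 10.0)
print("real symmetric roots:", sym)
print("real anti-symmetric roots:", anti)
```

For these values the scan should return one real symmetric root and two real anti-symmetric ones, which we would identify with the \(S_0\), \(A_1\) (already above its cut-off at this frequency) and \(A_0\) modes described below. Multiplying through by denominators can in principle introduce spurious roots, so it is worth checking each root against the original form of (1a) or (1b).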

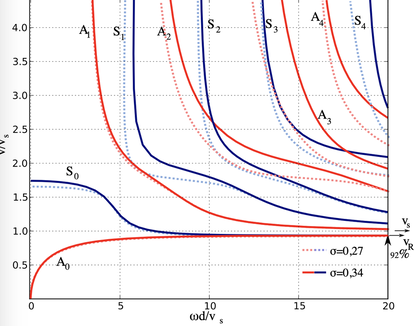

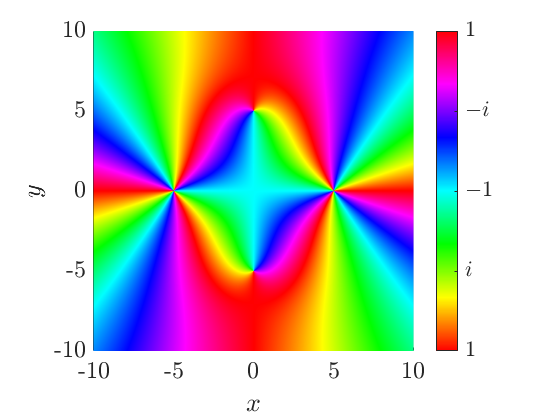

Solutions to (\(1a\)) correspond to symmetric (extensional) modes \(S_n\), and solutions to (\(1b\)) describe anti-symmetric (flexural) modes \(A_n\); see Figure 1. The waves associated with these modes of vibration (\(S_n, A_n\)) are commonly referred to as Lamb waves. The subscript \(n\) is a non-negative integer that labels each mode. The fundamental modes \(S_0\) and \(A_0\) exist at all frequencies, whereas each higher-order mode (\(n \geq 1\)) only propagates above a cut-off frequency that depends on the plate thickness; the velocity of every mode varies with the frequency of excitation, as illustrated in Figure 2.

Figure 1: A0 (left) and S0 (right) modes propagating in an elastic plate. Animations created by Dr. Noé Jiménez, many thanks for the permission to use these here! For more animations check out his personal website: https://nojigon.webs.upv.es/simulations_waves.php

Figure 2: Some dispersion curves \(S_n\), \(A_n\) of the Lamb dispersion relation for two different materials, characterised by their Poisson's ratio σ. The vertical axis denotes the wave velocity and the horizontal axis the frequency. Credit: Svebert, CC BY 3.0 https://creativecommons.org/licenses/by/3.0, via Wikimedia Commons

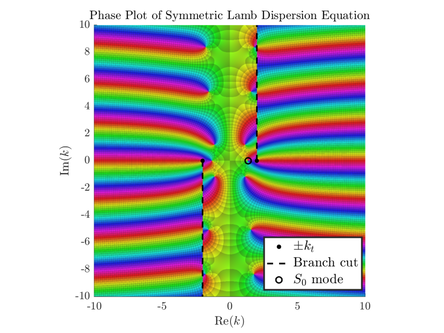

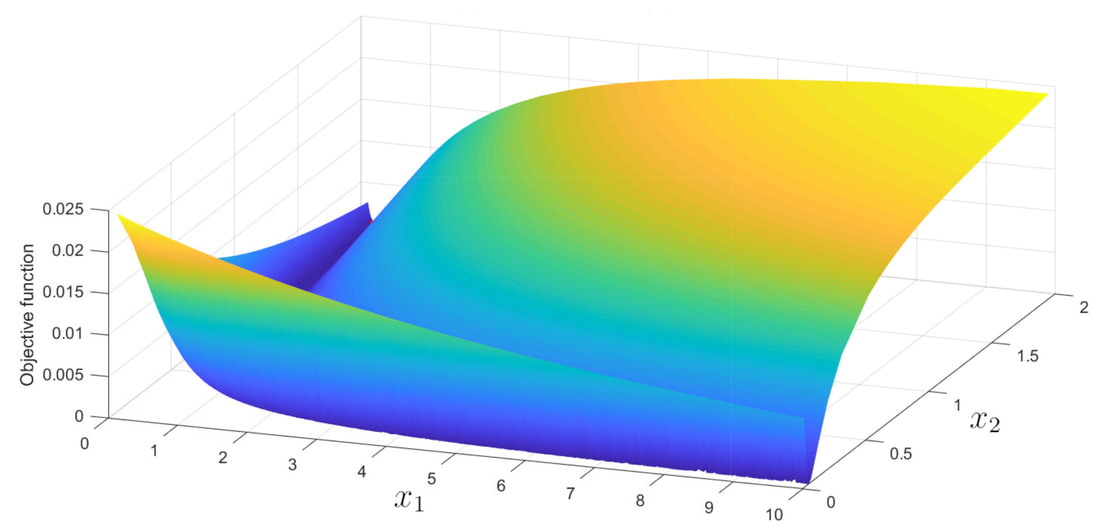

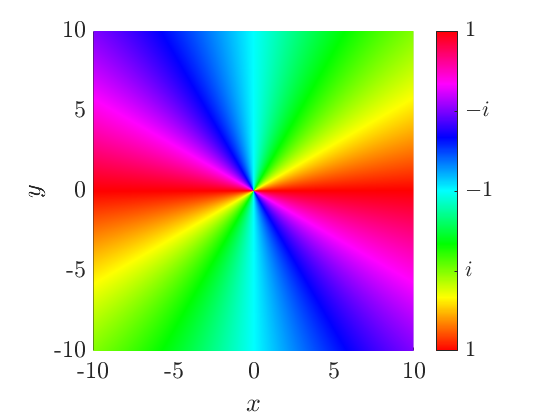

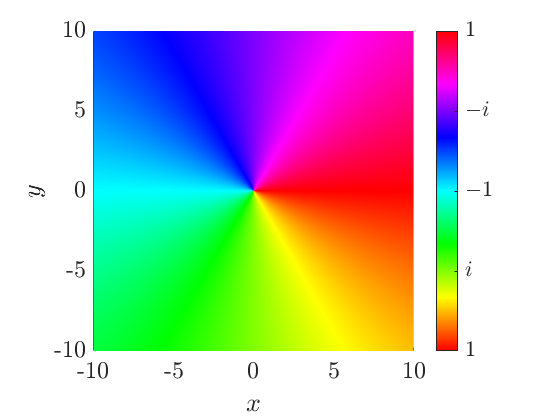

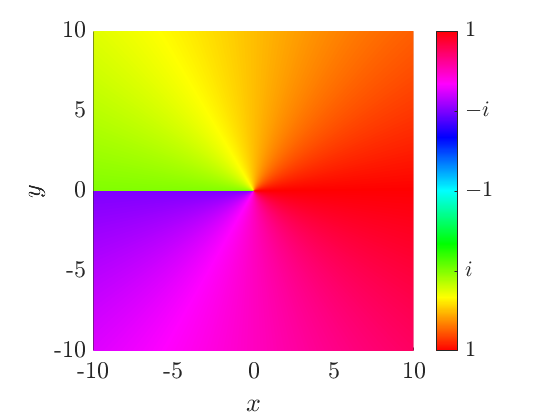

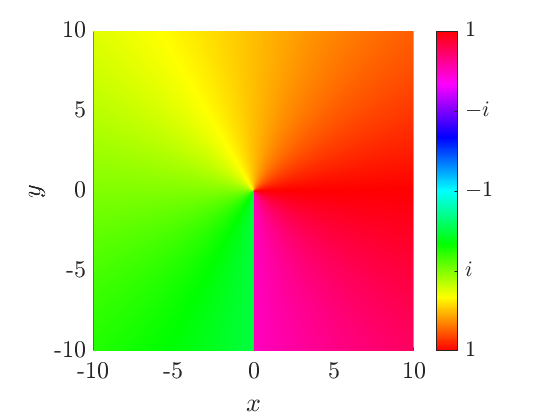

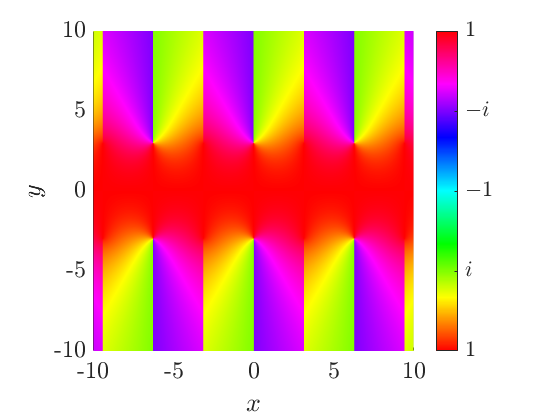

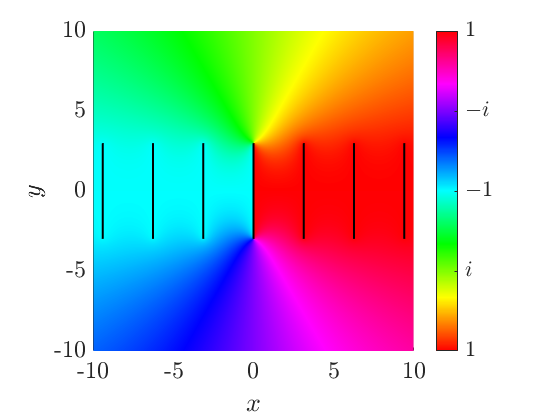

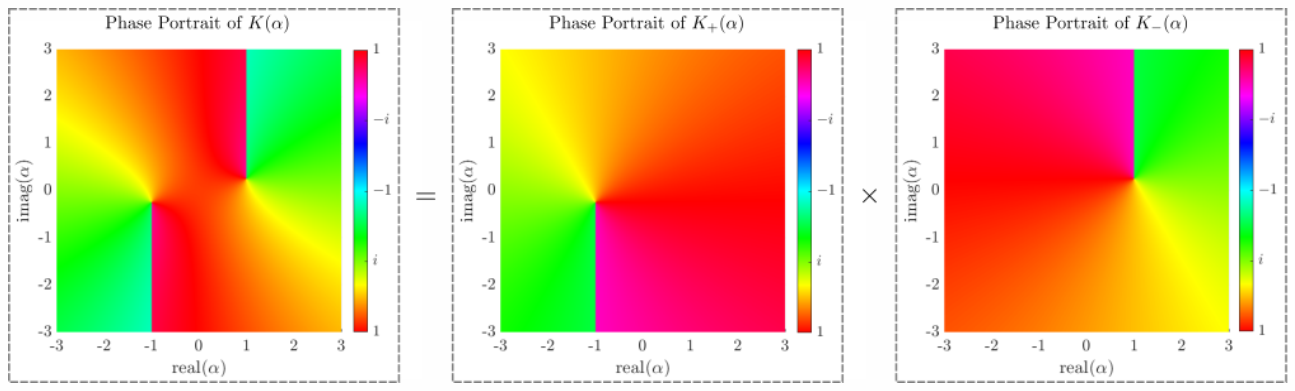

Equations (\(1\)) are highly non-linear equations for the wavenumber \(k\), whose roots in general lie in the complex plane, and as a result they are difficult to solve. Particular limits can give useful insight: for example, for waves that are short compared with the thickness of the plate, (\(1a\)) reduces to the Rayleigh dispersion relation. In general, however, analytical solutions to these equations are not readily available, and numerical techniques are usually adopted (e.g. Figure 3). It is remarkable how much harder Lamb's task of analysing the main properties of these equations was, and how clearly the original paper [2] reads, given his limited computing resources.

Figure 3: A numerical visualization in k-space of the symmetric equation (1a) with \(d = 2\), \(k_l = 1.2\) and \(k_t = 2\). Much easier to identify the roots now than in 1916...
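The short-wave limit mentioned above is easy to check. When \(\gamma_l d\) and \(\gamma_t d\) are large, both hyperbolic tangents in (1a) tend to one and the equation collapses to \((k^2+\gamma_t^2)^2 = 4k^2\gamma_l\gamma_t\), i.e. \((2k^2 - k_t^2)^2 = 4k^2\gamma_l\gamma_t\); the same happens to (1b). The Python sketch below is a minimal illustration under our own assumptions (hypothetical function names, and only the real root with \(k > k_t\) is sought) that finds the Rayleigh wavenumber \(k_R\) by bisection.

```python
import math


def rayleigh_residual(k, k_l, k_t):
    """Rayleigh function R(k) = (2k^2 - k_t^2)^2 - 4 k^2 gamma_l gamma_t for real
    k > k_t > k_l; this is the tanh -> 1 limit of (1a) and (1b)."""
    gamma_l = math.sqrt(k**2 - k_l**2)
    gamma_t = math.sqrt(k**2 - k_t**2)
    return (2 * k**2 - k_t**2) ** 2 - 4 * k**2 * gamma_l * gamma_t


def rayleigh_wavenumber(k_l, k_t, tol=1e-12):
    """Bisection for the root of R(k) on (k_t, 10 k_t).

    R(k_t) = k_t^4 > 0 while R(k) ~ -2 k^2 (k_t^2 - k_l^2) < 0 for large k,
    so the interval brackets the root whenever k_l < k_t (c_l > c_t).
    """
    lo, hi = k_t, 10 * k_t
    while hi - lo > tol * k_t:
        mid = 0.5 * (lo + hi)
        if rayleigh_residual(mid, k_l, k_t) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


k_l, k_t = 1.2, 2.0  # the values used for Figure 3
k_R = rayleigh_wavenumber(k_l, k_t)
print(f"k_R = {k_R:.6f}, c_R / c_t = {k_t / k_R:.4f}")
```

Since \(k_R > k_t\), the Rayleigh wave is slower than bulk shear waves; the phase velocities of both \(S_0\) and \(A_0\) approach this speed at high frequency-thickness products.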

The original paper currently has over 2000 citations, more than 80% of which are from this century. This is mainly due to computational and experimental advances over the years, which have given rise to many applications of Lamb waves, particularly in ultrasonic non-destructive testing. Lamb waves remain the subject of current research and have recently motivated interesting metamaterial structures [3, 4]. Lastly, the study of guided elastic (Lamb) waves with application to hidden tamper detection was the subject of the PhD thesis of former MWM member Dr. Robert Davey, which can be found in [5].

E. Garcia-Neefjes

[1] Launder, B. (2017) Horace Lamb… and how he found his way back to Manchester, Comptes Rendus Mécanique, 345(7), 477-487.

[2] Lamb, H. (1917) On waves in an elastic plate, Proceedings of the Royal Society of London. Series A, Containing Papers of a Mathematical and Physical Character, 93(648), pp. 114-128.

[3] Williams, E. G., Roux, P., Rupin, M. & Kuperman, W. A. (2015) Theory of multiresonant metamaterials for A0 Lamb waves, Physical Review B, 91(10), p. 104307.

[4] Zhao, D.-G., Li, Y. & Zhu, X.-F. (2015) Broadband Lamb Wave Trapping in Cellular Metamaterial Plates with Multiple Local Resonances, Scientific Reports, 5(1), p. 9376.

[5] Davey, R. (2018) An improved approach to the modelling of guided elastic waves with application to hidden tamper detection. University of Manchester

[2] Lamb, H. (1917) On waves in an elastic plate, Proceedings of the Royal Society of London. Series A, Containing Papers of a Mathematical and Physical Character, 93(648), pp. 114-128.

[3] Williams, E. G., Roux, P., Rupin, M. & Kuperman, W. A. (2015) Theory of multiresonant metamaterials for A0 Lamb waves, Physical Review B, 91(10), p. 104307.

[4] Zhao, D.-G., Li, Y. & Zhu, X.-F. (2015) Broadband Lamb Wave Trapping in Cellular Metamaterial Plates with Multiple Local Resonances, Scientific Reports, 5(1), p. 9376.

[5] Davey, R. (2018) An improved approach to the modelling of guided elastic waves with application to hidden tamper detection. University of Manchester

M is for Metamaterials

|

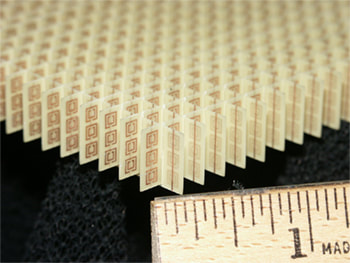

In 1968, Viktor Veselago published a paper describing the possibility of negative refraction [1]. He proposed that this would require a medium in which permittivity, ε, and magnetic permeability, μ, were negative. These properties describe how a material responds to applied electric and magnetic fields, and are positive in conventional materials. Veselago predicted that such a material could have new and interesting optical properties, such as a reverse Doppler effect. Veselago’s work went largely unnoticed until the late 1990s, when John Pendry and co-workers created two designs; a periodic mesh of very thin wires with negative ε [2, 3]; and an array of split-ring cylinders with negative μ [4]. Building on Pendry’s and Veselago’s work, Smith and co-workers made a composite structure in which both ε and μ were negative in the microwave region, resulting in a negative refractive index in that frequency range [5]. Smith called his periodoc array a

|

A split ring resonator array, part of a 'left-handed' metamaterial [6]. Credit: [email protected] (Glenn research contact), Public domain, via Wikimedia Commons

|

metamaterial, a term already being used to describe new and unusual materials. This opened up the field of metamaterials into what has become a very broad area of research.
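Veselago's idea can be illustrated with Snell's law. For a lossless, isotropic medium the refractive index is \(n = \pm\sqrt{\varepsilon_r\mu_r}\), and when the relative permittivity \(\varepsilon_r\) and permeability \(\mu_r\) are both negative the negative root is taken, so a ray entering from air bends to the same side of the normal as the incident ray. The Python sketch below is illustrative only: it assumes dimensionless relative parameters, ignores losses and dispersion, and the function names are hypothetical.

```python
import math


def refractive_index(eps_r, mu_r):
    """n = +/- sqrt(eps_r * mu_r) for a lossless isotropic medium; the negative
    root is taken when eps_r and mu_r are both negative (Veselago's medium)."""
    if eps_r * mu_r < 0:
        # Only one of eps_r, mu_r is negative: waves are evanescent, not propagating
        raise ValueError("single-negative medium: no propagating wave")
    n = math.sqrt(eps_r * mu_r)
    return -n if (eps_r < 0 and mu_r < 0) else n


def refraction_angle_deg(theta_in_deg, n1, n2):
    """Snell's law n1 sin(theta1) = n2 sin(theta2). A negative result means the
    refracted ray lies on the same side of the normal as the incident ray."""
    s = n1 * math.sin(math.radians(theta_in_deg)) / n2
    if abs(s) > 1:
        raise ValueError("total internal reflection")
    return math.degrees(math.asin(s))


glass = refractive_index(2.25, 1.0)       # conventional medium, n = 1.5
veselago = refractive_index(-1.0, -1.0)   # doubly negative medium, n = -1
print(refraction_angle_deg(30.0, 1.0, glass))     # about +19.47 degrees
print(refraction_angle_deg(30.0, 1.0, veselago))  # about -30 degrees
```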

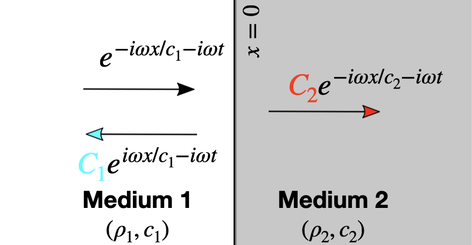

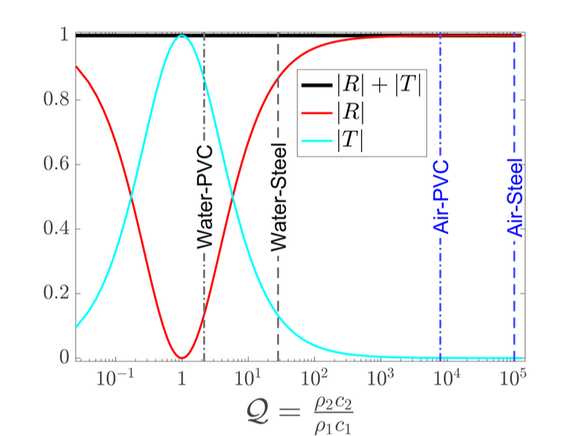

The properties of metamaterials result from their structure rather than their chemical composition. Metamaterials are composed of subunits (sometimes referred to as meta-atoms) on a sub-wavelength scale, which are usually arranged periodically. The geometry, size and relative positions of the subunits confer properties on the bulk metamaterial that are not found in the constituent materials. The prefix meta comes from the Greek, meaning “after” or “beyond”, and metamaterials can be considered to have properties beyond those of conventional materials. In particular, the sub-wavelength microstructures of metamaterials allow them to interact with waves in unusual ways. The early examples from Pendry and Smith operated in the microwave region of the electromagnetic spectrum, but the field has broadened rapidly to include elastic, seismic, acoustic and mechanical metamaterials. As the wavelengths of different types of waves vary hugely in scale, the scale and design of metamaterial subunits are equally wide-ranging.
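To get a feel for how widely the subunit scale varies, here is a back-of-the-envelope Python sketch: the wavelength is \(\lambda = c/f\), and a subunit is sub-wavelength when it is a small fraction of \(\lambda\). The wave speeds, frequencies and the "one tenth of a wavelength" size are typical values we assume purely for illustration; they are not taken from the designs discussed in this post.

```python
# Assumed, illustrative (wave speed in m/s, frequency in Hz) pairs
EXAMPLES = {
    "microwaves in air (10 GHz)": (3.0e8, 10e9),
    "audible sound in air (1 kHz)": (343.0, 1e3),
    "seismic surface wave in soil (50 Hz)": (300.0, 50.0),
}


def wavelength(speed, frequency):
    """Wavelength lambda = c / f in metres."""
    return speed / frequency


for name, (c, f) in EXAMPLES.items():
    lam = wavelength(c, f)
    # A tenth of a wavelength is used here only as an illustrative
    # 'comfortably sub-wavelength' subunit size, not a design rule
    print(f"{name}: wavelength {lam:.3g} m, subunit of order {lam / 10:.3g} m")
```

The same idea spans centimetre-scale microwave cells, decimetre-scale acoustic cells and metre-scale seismic resonators.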


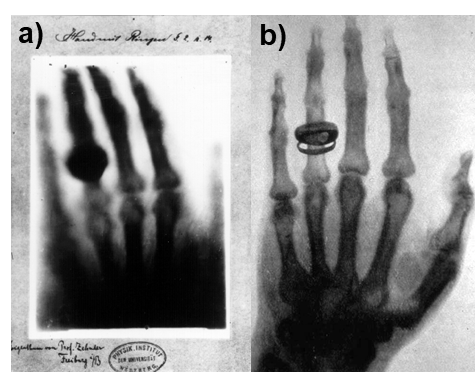

Although metamaterials are sometimes described as having properties not found in nature, several natural materials have been proposed as metamaterials, ranging in scale from the very small to the very large. For example, the wings of the Morpho butterfly get their distinctive blue colour not from pigmentation, but from the interaction of light with a microstructure consisting of a grating of ribs [7]. A recent study showed that the scales on moth wings act as a natural acoustic metamaterial, absorbing a broad range of ultrasound frequencies and camouflaging the moth from echolocating bats [8]. At the larger end of the scale, periodic arrangements of trees in forests have been discussed as resonant metamaterials that attenuate seismic waves at specific frequencies between 15 and 150 Hz [9].

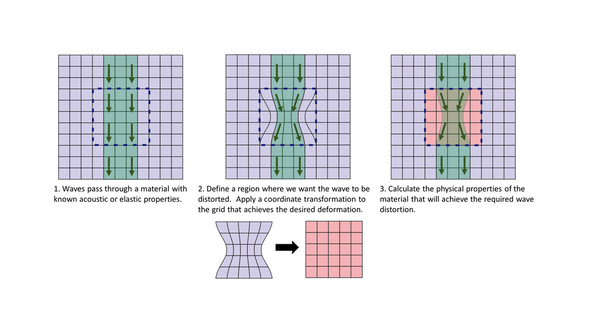

Synthetic metamaterials are equally varied. Since their unusual properties arise from their geometry and not their composition, a wide range of materials has been used to make them. These include paper (where the metamaterial structure is achieved by origami), Lego® bricks (whose modular nature lends itself to building periodic structures), bubbly water, and 3-D printed media [10-13]. The structural design is often modelled mathematically using coordinate transformation methods. The process is outlined in the image below: the desired wave manipulation is first defined in terms of a spatial, or coordinate, transformation. Then the physical properties of a material capable of achieving this wave distortion are calculated. These properties are often challenging to achieve: a feature common to many metamaterials is that some of their properties take negative values, where a positive value is the norm in a conventional material. Examples include negative Poisson’s ratio, refractive index, effective bulk modulus and mass density.
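Negative effective mass density, one of the examples above, already appears in the simplest locally resonant model, often called a "mass-in-mass" unit: an outer mass \(m_1\) containing an inner mass \(m_2\) on a spring with resonance frequency \(\omega_0\). This is a standard textbook model that we have chosen for illustration, not one of the designs cited here, and the function names are hypothetical. For time-harmonic motion at frequency \(\omega\), eliminating the inner mass gives \(m_{\text{eff}} = m_1 + m_2\omega_0^2/(\omega_0^2 - \omega^2)\), which is negative for \(\omega_0 < \omega < \omega_0\sqrt{1 + m_2/m_1}\).

```python
import math


def effective_mass(omega, m1, m2, omega0):
    """Dynamic effective mass of a 1D mass-in-mass unit at angular frequency omega.

    The inner mass m2 is attached to the outer mass m1 by a spring with
    resonance omega0 = sqrt(k2 / m2); omega must differ from omega0.
    """
    return m1 + m2 * omega0**2 / (omega0**2 - omega**2)


def negative_mass_band(m1, m2, omega0):
    """Frequency interval in which effective_mass(...) < 0."""
    return omega0, omega0 * math.sqrt(1 + m2 / m1)


m1, m2, omega0 = 1.0, 1.0, 1.0  # illustrative, non-dimensional values
print(negative_mass_band(m1, m2, omega0))  # (1.0, 1.414...)
for omega in (0.5, 1.2, 1.5):
    print(omega, effective_mass(omega, m1, m2, omega0))
```

In a chain of such units joined by springs, the dispersion relation has the form \(m_{\text{eff}}\,\omega^2 = 4K\sin^2(qa/2) \geq 0\) (with \(K\) the coupling spring and \(a\) the spacing, both our notation), so no real wavenumber \(q\) exists where \(m_{\text{eff}} < 0\): this band behaves as a frequency band gap.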

Transformation optics was pioneered by Smith and Pendry in the development of the first metamaterial cloak, which was capable of concealing a small copper cylinder from microwaves by bending the waves around the object [14]. While optical and acoustic cloaking remain key areas of research in metamaterials, many other practical uses have emerged. These include acoustic lensing and sound attenuation, and the selective elimination of unwanted frequencies (the frequency band gap, discussed here). Other notable applications include a metamaterial film that exhibits radiative cooling, metamaterial biosensors, and synchrotron generation on a metasurface [15-17].
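The two-step recipe of transformation optics described above can be made concrete with the classic textbook example of a cylindrical cloak. It uses the linear radial map \(r = r_1 + r'(r_2 - r_1)/r_2\), which squeezes the disc \(r' < r_2\) into the shell \(r_1 < r < r_2\) and leaves a hidden region \(r < r_1\). For this map the ideal relative permittivity and permeability (taken equal) have components \(\varepsilon_r = (r-r_1)/r\), \(\varepsilon_\theta = r/(r-r_1)\) and \(\varepsilon_z = \left(r_2/(r_2-r_1)\right)^2 (r-r_1)/r\). The Python sketch below simply evaluates these; it is our illustration of the technique, the function name is hypothetical, and we do not claim these are the exact values realised in the device of [14].

```python
def cylindrical_cloak_parameters(r, r1, r2):
    """Ideal relative (eps = mu) components (radial, azimuthal, axial) at radius r
    for the linear radial map r = r1 + r' (r2 - r1) / r2, valid for r1 < r <= r2."""
    if not r1 < r <= r2:
        raise ValueError("r must lie inside the cloaking shell (r1, r2]")
    radial = (r - r1) / r
    azimuthal = r / (r - r1)
    axial = (r2 / (r2 - r1)) ** 2 * (r - r1) / r
    return radial, azimuthal, axial


r1, r2 = 1.0, 2.0  # illustrative inner and outer radii
for r in (1.01, 1.5, 2.0):
    radial, azimuthal, axial = cylindrical_cloak_parameters(r, r1, r2)
    print(f"r = {r}: radial {radial:.3f}, azimuthal {azimuthal:.3f}, axial {axial:.3f}")
```

Near the inner radius the radial component tends to zero while the azimuthal one diverges. Such extreme, strongly anisotropic values are the kind of hard-to-achieve properties mentioned above, which is one reason practical designs often settle for simplified parameter sets.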

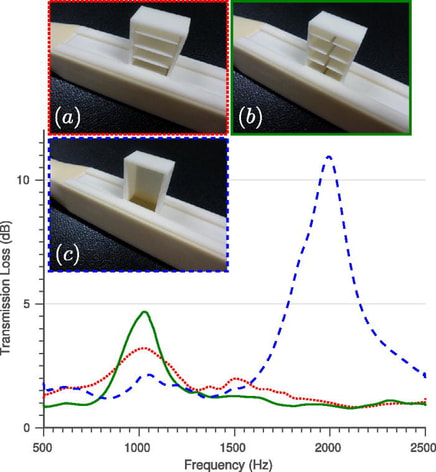

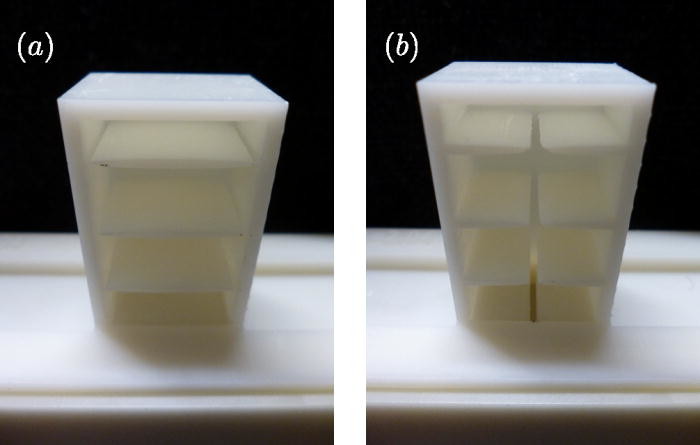

Acoustic and elastic metamaterials are a strong research theme in the Waves and Materials group. In this blog post you can read about a metamaterial that halves the effective speed of sound while maintaining an acoustic impedance close to that of air, allowing significant space savings in noise-cancellation devices (see also A is for Acoustics, above). Read more about our work in this and related fields here.